Check out this repository for 3D machine learning.

It is implemented using Stereo R-CNN, which is an extension of Faster R-CNN.

Evolution of R-CNN:

R-CNN -> Fast R-CNN -> Faster R-CNN

- (a) Input image

- (b) Extract region proposals

- (c) Compute CNN features, then classify regions (using an SVM)

- Bounding box: run a simple linear regression on each region proposal to generate tighter bounding-box coordinates

- In the first step, the input image goes through a convolutional network, which outputs a set of convolutional feature maps at its last convolutional layer.

Furthermore, for each anchor box, a value p* is computed that indicates how much the anchor overlaps with the ground-truth bounding boxes:

p* is 1 if IoU > 0.7

p* is -1 if IoU < 0.3

where IoU is the intersection over union, defined as:

IoU = (Anchor ∩ GTBox) / (Anchor ∪ GTBox)
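The IoU formula and the p* thresholds above can be sketched in a few lines of Python. This is a minimal illustration, not the repository's code: the function names `iou` and `anchor_label` are hypothetical, boxes are assumed to be `(x1, y1, x2, y2)` corner tuples, an anchor is compared against its best-matching ground-truth box, and anchors falling between the two thresholds are assumed to get label 0 (ignored).

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle.
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Width and height are clamped to zero when the boxes do not overlap.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def anchor_label(anchor, gt_boxes, pos_thresh=0.7, neg_thresh=0.3):
    """Return p*: 1 (object), -1 (background), or 0 (assumed: ignored)."""
    best = max((iou(anchor, gt) for gt in gt_boxes), default=0.0)
    if best > pos_thresh:
        return 1
    if best < neg_thresh:
        return -1
    return 0  # assumption: in-between anchors do not contribute to training


# Two 10x10 boxes shifted by 5 pixels overlap in a 5x10 strip.
overlap = iou((0, 0, 10, 10), (5, 0, 15, 10))
label = anchor_label((0, 0, 10, 10), [(5, 0, 15, 10)])
```

Here the intersection is 50 and the union is 150, so the IoU is 1/3, which lies between the two thresholds.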

- Finally, the 3×3 spatial features extracted from those convolutional feature maps are fed to a smaller network that has two tasks: classification (cls) and regression (reg). The output of the regressor is a predicted bounding box (x, y, w, h). The output of the classification sub-network is a probability p indicating whether the predicted box contains an object (1) or is background (0).

- LiDAR-based 3D Object Detection: Currently, most 3D object detection methods rely heavily on LiDAR data to provide accurate depth information in autonomous driving scenarios. However, LiDAR has the disadvantages of high cost and a relatively short perception range (∼100 m). Large companies use this method even though, by a rough estimate, the sensor can account for about 50% of a car's cost, whereas the Stereo R-CNN method costs only about 5%.

- Monocular-based 3D Object Detection: A monocular camera (a single camera) provides an alternative low-cost solution for 3D object detection. Depth can be predicted from semantic properties of the scene, object size, and similar cues. However, the accuracy of the inferred depth cannot be guaranteed, especially for unseen scenes.

- Stereo R-CNN based 3D Object Detection: Compared with a monocular camera, a stereo camera provides more precise depth information through left-right photometric alignment. Compared with LiDAR, a stereo camera is low-cost while achieving comparable depth accuracy for objects with non-trivial disparities. The perception range of a stereo camera depends on the focal length and the baseline. It uses two or more cameras. The difference is like seeing with one eye (monocular) versus seeing with two or more eyes (stereo).
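To see why the perception range depends on the focal length and the baseline, recall the standard rectified-stereo relation depth = focal length × baseline / disparity. The sketch below is illustrative only; the function name `depth_from_disparity` and the numeric values are hypothetical and not taken from the repository.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in meters for a rectified stereo pair: z = f * b / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, 0.5 m baseline.
near = depth_from_disparity(700.0, 0.5, 35.0)  # large disparity, close object
far = depth_from_disparity(700.0, 0.5, 3.5)    # small disparity, distant object
```

Because depth is inversely proportional to disparity, distant objects produce small disparities where a one-pixel error matters a lot; a longer focal length or a wider baseline increases the disparity at a given depth and so extends the usable range.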

Let's look at how it works:

The main parts of this network that we are going to discuss are:

1. Stereo Regression

2. Keypoint Prediction

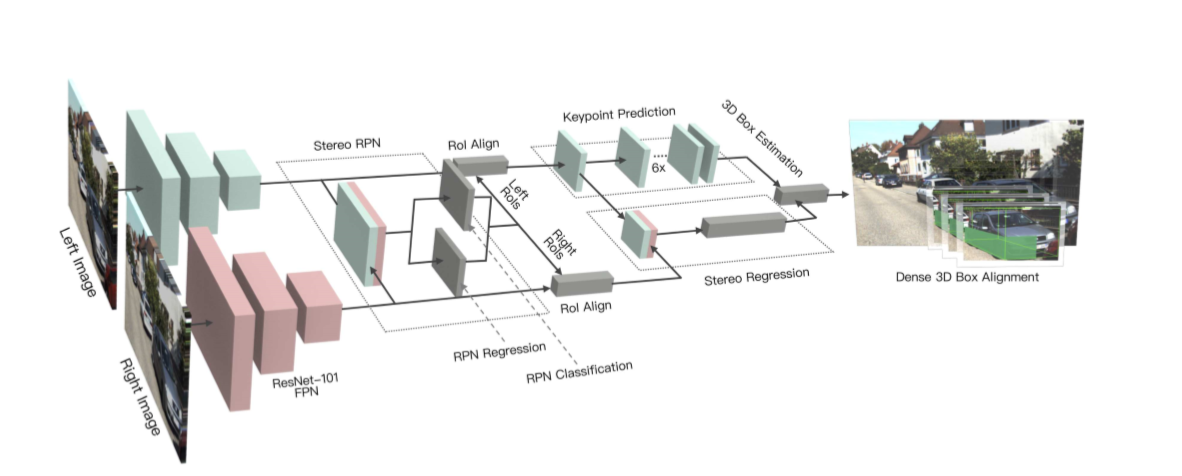

Stereo R-CNN Network

Stereo R-CNN can simultaneously detect and associate 2D bounding boxes for left and right images with minor modifications to Faster R-CNN. We use weight-sharing ResNet-101 and FPN as our backbone network to extract consistent features on the left and right images. The backbone outputs feature maps.

- ResNet-101: Residual Network with 101 layers

- FPN: Feature Pyramid Network

Stereo RPN

The Region Proposal Network (RPN) is a sliding-window-based foreground detector. After feature extraction, a 3 × 3 convolution layer is used to reduce the number of channels, followed by two sibling fully-connected layers that classify objectness and regress box offsets for each input location, which is anchored with predefined multi-scale boxes. For the stereo RPN, we concatenate the left and right feature maps at each scale and feed the concatenated features into the stereo RPN network.

Stereo R-CNN:

1. Stereo Regression: After the stereo RPN, we have corresponding left-right proposal pairs. We apply RoI Align on the left and right feature maps respectively, at the appropriate pyramid level. The left and right RoI features are concatenated and fed into two sequential fully-connected layers (each followed by a ReLU layer) to extract semantic information. We use four sub-branches to predict the object class, stereo bounding boxes, dimensions, and viewpoint angle, respectively.

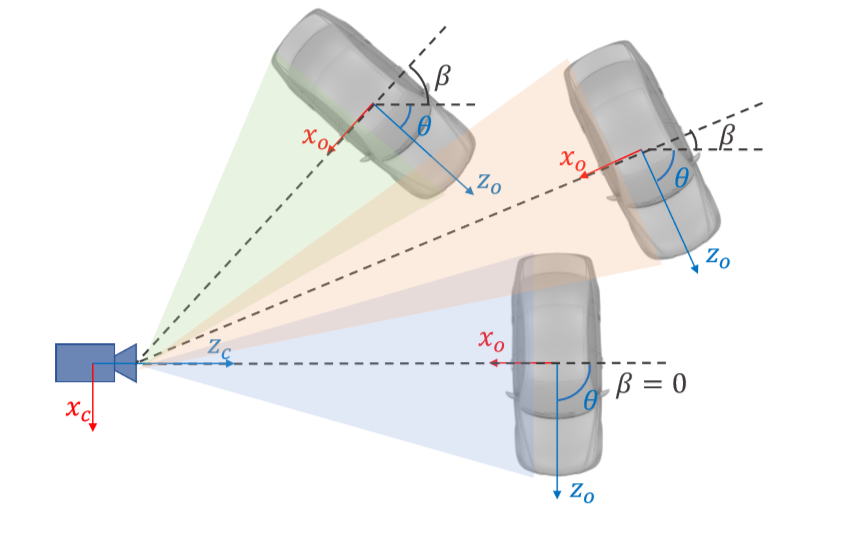

We use θ to denote the vehicle orientation with respect to the camera frame, and β to denote the object azimuth with respect to the camera center. The three vehicles in the figure have different orientations; however, their projections are exactly the same on the cropped RoI images. We therefore regress the viewpoint angle α, defined as α = θ + β. To avoid the discontinuity, the training targets are the pair [sin α, cos α] instead of the raw angle value.

With the stereo boxes and object dimensions, the depth information can be recovered intuitively, and the vehicle orientation can also be solved by decoupling the relation between the viewpoint angle and the 3D position.
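The [sin α, cos α] encoding and the recovery of θ from α and β can be sketched as follows. This is an illustrative sketch, not the repository's implementation: the function names are hypothetical, and β is simply passed in as a known value rather than derived from the 3D position.

```python
import math


def encode_viewpoint(theta, beta):
    """Training target for the viewpoint angle alpha = theta + beta."""
    alpha = theta + beta
    # The (sin, cos) pair is continuous where the raw angle wraps around.
    return math.sin(alpha), math.cos(alpha)


def decode_orientation(sin_alpha, cos_alpha, beta):
    """Recover theta = alpha - beta, wrapped to (-pi, pi]."""
    alpha = math.atan2(sin_alpha, cos_alpha)
    theta = alpha - beta
    # Re-wrap, since the subtraction can leave the principal range.
    return math.atan2(math.sin(theta), math.cos(theta))


# Round trip with alpha = 3.3 rad, which lies beyond pi and wraps around.
target = encode_viewpoint(3.0, 0.3)
theta = decode_orientation(target[0], target[1], 0.3)
```

Angles just below π and just above −π describe nearly the same direction, yet their raw values differ by almost 2π; the sine and cosine of those angles are close, which is why the pair is a better regression target.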

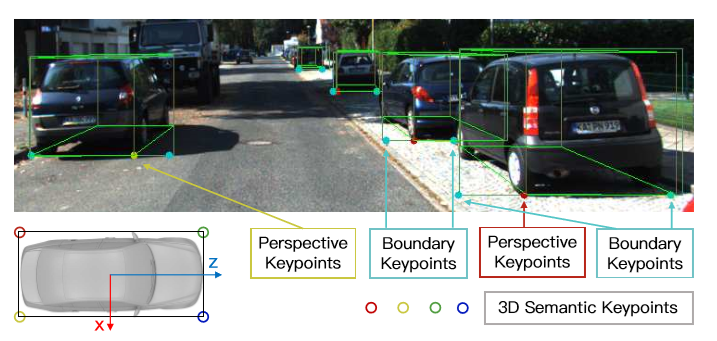

2. Keypoint Prediction: Besides the stereo boxes and viewpoint angle, we notice that the 3D box corner that is projected in the middle of the 2D box can provide more rigorous constraints for the 3D box estimation.

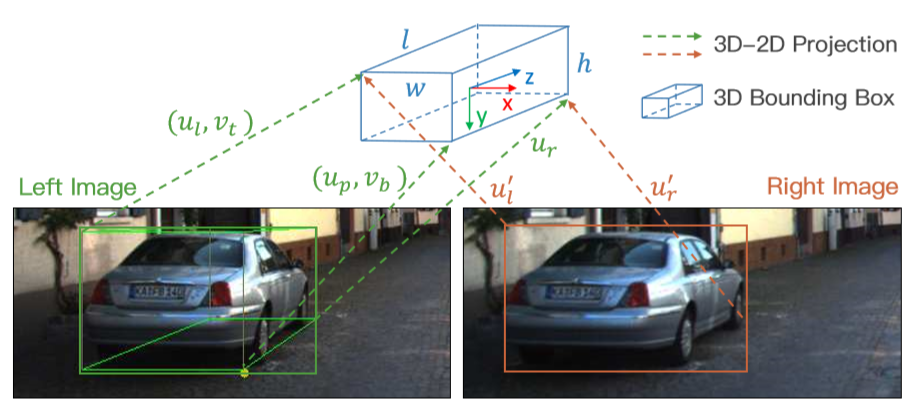

3D Box Estimation

We solve a coarse 3D bounding box using the sparse keypoint and 2D box information. The state of the 3D bounding box can be represented by x = {x, y, z, θ}, which denotes the 3D center position and the horizontal orientation, respectively. Given the left-right 2D boxes, the perspective keypoint, and the regressed dimensions, the 3D box can be solved by minimizing the reprojection error of the 2D boxes and the keypoint.

We extract seven measurements from the stereo boxes and perspective keypoints: $z = \{u_l, v_t, u_r, v_b, u'_l, u'_r, u_p\}$, which represent the left, top, right, and bottom edges of the left 2D box, the left and right edges of the right 2D box, and the u coordinate of the perspective keypoint.

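The measurements are related to the 3D box state by the projection equations:

$$
\begin{aligned}
v_t &= \frac{y - \frac{h}{2}}{z - \frac{w}{2}\sin\theta - \frac{l}{2}\cos\theta}, \\
u_l &= \frac{x - \frac{w}{2}\cos\theta - \frac{l}{2}\sin\theta}{z + \frac{w}{2}\sin\theta - \frac{l}{2}\cos\theta}, \\
u_p &= \frac{x + \frac{w}{2}\cos\theta - \frac{l}{2}\sin\theta}{z - \frac{w}{2}\sin\theta - \frac{l}{2}\cos\theta}, \\
u'_r &= \frac{x - b + \frac{w}{2}\cos\theta + \frac{l}{2}\sin\theta}{z - \frac{w}{2}\sin\theta + \frac{l}{2}\cos\theta},
\end{aligned}
$$

where b is the baseline of the stereo camera and w, h, l are the regressed dimensions.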

Truncated edges are dropped from the seven equations above. These multivariate equations are solved via the Gauss-Newton method.
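As a rough illustration of what the solver works with, the sketch below evaluates the four projection equations written out above and forms the residuals that a Gauss-Newton iteration would drive toward zero. It is not the repository's solver: the function names and dictionary keys are hypothetical, the measurements are assumed to be in normalized image coordinates, and only the equations given explicitly in this document are included.

```python
import math


def project_measurements(state, dims, baseline):
    """Predict v_t, u_l, u_p and u'_r from the state (x, y, z, theta)."""
    x, y, z, theta = state
    w, h, l = dims
    s, c = math.sin(theta), math.cos(theta)
    hw, hh, hl = w / 2.0, h / 2.0, l / 2.0
    return {
        "v_t": (y - hh) / (z - hw * s - hl * c),
        "u_l": (x - hw * c - hl * s) / (z + hw * s - hl * c),
        "u_p": (x + hw * c - hl * s) / (z - hw * s - hl * c),
        # Right-image measurement: x is shifted by the stereo baseline b.
        "u_r_right": (x - baseline + hw * c + hl * s) / (z - hw * s + hl * c),
    }


def residuals(state, dims, baseline, observed):
    """Observed minus predicted, only for measurements that were observed."""
    predicted = project_measurements(state, dims, baseline)
    # A truncated edge is modeled by leaving its key out of `observed`.
    return {k: observed[k] - predicted[k] for k in observed if k in predicted}


# Hypothetical box: 2 m wide, 2 m high, 4 m long, 10 m ahead, theta = 0.
state = (1.0, 1.0, 10.0, 0.0)
dims = (2.0, 2.0, 4.0)
z_hat = project_measurements(state, dims, 0.5)
r = residuals(state, dims, 0.5, {"u_p": 0.25, "u_r_right": 0.125})
```

With θ = 0 the denominators reduce to z − l/2 or z + l/2, so the predicted values can be checked by hand; a full solver would repeatedly linearize these residuals around the current state and update (x, y, z, θ).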

This project contains the implementation of the CVPR 2019 paper (arXiv).

Code and instructions are taken from here

Authors: Peiliang Li, Xiaozhi Chen and Shaojie Shen from the HKUST Aerial Robotics Group, and DJI.

@inproceedings{licvpr2019,

title = {Stereo R-CNN based 3D Object Detection for Autonomous Driving},

author = {Li, Peiliang and Chen, Xiaozhi and Shen, Shaojie},

booktitle = {CVPR},

year = {2019}

}

This implementation is tested under PyTorch 1.0.0. To avoid affecting your existing PyTorch version, we recommend using conda to keep multiple versions of PyTorch side by side.

0.0 Install PyTorch

conda create -n env_stereo python=3.6

conda activate env_stereo

conda install pytorch=1.0.0 cuda90 -c pytorch

conda install torchvision -c pytorch

0.1 Other Dependencies

git clone git@github.com:HKUST-Aerial-Robotics/Stereo-RCNN.git

cd Stereo-RCNN

git checkout 1.0

pip install -r requirements.txt

0.2 Build

cd lib

python setup.py build develop

cd ..

1.0. Set the folder for placing the model

mkdir models_stereo

1.1. Download our trained weights (Google Drive), put them into models_stereo/, then run

python demo.py

If everything goes well, you will see the detection results on the left, right, and bird's-eye-view images, respectively.