A curated list of awesome structural bioinformatics frameworks, libraries, software and resources.

So let it not look strange if I claim that it is much easier to explain the movement of the giant celestial bodies than to interpret in mechanical terms the origination of just a single caterpillar or a tiny grass. - Immanuel Kant, Natural History and the Theory of Heaven, 1755

Books on Cheminformatics, Bioinformatics, Quantum Chemistry strangle the subject to sleep 😴 and command a wild price 🤑 for the naps they induce.

Want a better way to learn than some random repo on GitHub?

Spend 4-12 years of your life and hundreds of thousands of dollars chasing a paper with a stamp on it 🥇.

Or feed yourself 🍼.

Information should be cheap, fast, enjoyable, silly, shared, disproven, contested, and most of all free.

Knowledge hodlers and innovation stiflers are boring and old. This is for the young of mind and young of spirit 🚼 who love to dock & fold.

Structure-function relationships are the fundamental object of knowledge in protein chemistry; they allow us to rationally design drugs, engineer proteins with new functions, and understand why mutations cause disease. - On The Origin of Proteins

There is now a testable explanation for how a protein can fold so quickly: A protein solves its large global optimization problem as a series of smaller local optimization problems, growing and assembling the native structure from peptide fragments, local structures first. - The Protein Folding Problem

The protein folding problem consists of three closely related puzzles:

- (a) What is the folding code?

- (b) What is the folding mechanism?

- (c) Can we predict the native structure of a protein from its amino acid sequence? source

- 💻 Code

- 📖 Paper 2

- 📖 Paper

- 📰 article

- AlphaFold CASP14 Results Discussion

- What AlphaFold means for Structural BioInformatics

- AlphaFold 2 Explained - Yannic Kilcher Video

- Illustrated Transformer

- Transformers from Scratch

- 💾 Code

- 💾 Code - Prospr - Open Source Implementation

- 📖 Prospr Paper

- AlphaFold @ CASP13: What Just Happened?

MiniFold - Open Source toy example of the AlphaFold (CASP13) algorithm

The DeepMind work presented @ CASP was not a technological breakthrough (they did not invent any new type of AI) but an engineering one: they applied well-known AI algorithms to a problem along with lots of data and computing power and found a great solution through model design, feature engineering, model ensembling and so on...

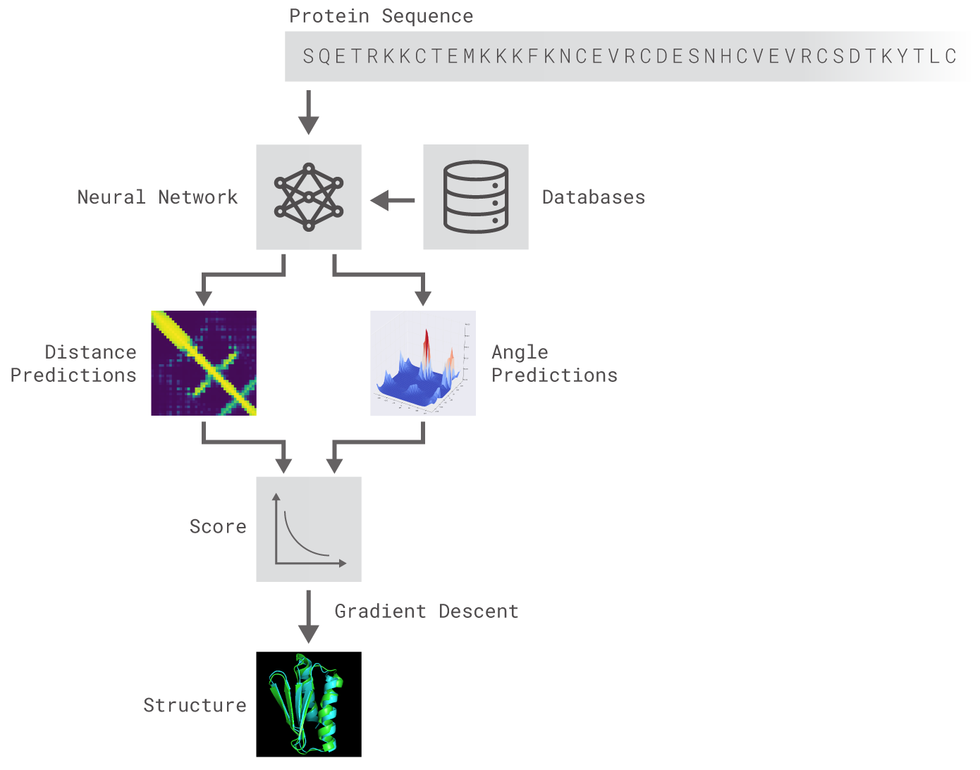

Based on the premise exposed before, the aim of this project is to build a model suitable for protein 3D structure prediction inspired by AlphaFold and many other AI solutions that may appear and achieve SOTA results.

Two different residual neural networks (ResNets) are used to predict the angles between adjacent amino acids (AAs) and the distance between every pair of AAs in a protein. For distance prediction a 2D ResNet is used, while for angle prediction a 1D ResNet is used.
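The pairwise distance target described above can be computed from known coordinates, which is how training labels for a distance-predicting network are typically produced. A minimal NumPy sketch, assuming one representative atom per residue (for example C-alpha) and hypothetical toy coordinates; this is not MiniFold's own code:

```python
import numpy as np

def distance_map(coords: np.ndarray) -> np.ndarray:
    """Pairwise Euclidean distances between residues.

    coords: (L, 3) array, one representative atom (e.g. C-alpha) per residue.
    Returns an (L, L) symmetric matrix with zeros on the diagonal.
    """
    diff = coords[:, None, :] - coords[None, :, :]  # (L, L, 3) via broadcasting
    return np.sqrt((diff ** 2).sum(axis=-1))

# Hypothetical toy "protein": 4 residues spaced 3.8 A apart on a straight line
coords = np.array([[0.0, 0.0, 0.0],
                   [3.8, 0.0, 0.0],
                   [7.6, 0.0, 0.0],
                   [11.4, 0.0, 0.0]])
dmap = distance_map(coords)
print(dmap.round(1))
```

A 2D network predicts this whole (L, L) matrix at once, which is why a 2D ResNet fits the distance task while per-residue angles suit a 1D ResNet.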

As deep learning algorithms drive the progress in protein structure prediction, a lot remains to be studied at this merging superhighway of deep learning and protein structure prediction. Recent findings show that inter-residue distance prediction, a more granular version of the well-known contact prediction problem, is a key to predicting accurate models. However, deep learning methods that predict these distances are still in the early stages of their development. To advance these methods and develop other novel methods, a need exists for a small and representative dataset packaged for faster development and testing. In this work, we introduce protein distance net (PDNET), a framework that consists of one such representative dataset along with the scripts for training and testing deep learning methods. The framework also includes all the scripts that were used to curate the dataset, and generate the input features and distance maps.
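The relationship between contact prediction and distance prediction can be shown on a distance map. Two residues are commonly called "in contact" when closer than 8 Å (CASP uses C-beta atoms for this), while distance predictors usually classify each pair into distance bins. A NumPy sketch; the 2 Å bin edges and the toy distance map are illustrative assumptions, not PDNET's settings:

```python
import numpy as np

def contact_map(dmap: np.ndarray, threshold: float = 8.0) -> np.ndarray:
    """Binary contact map: 1 where residues are closer than `threshold` angstroms.
    The diagonal (distance 0) is trivially a contact and is usually masked out."""
    return (dmap < threshold).astype(np.int8)

def distance_bins(dmap: np.ndarray, edges: np.ndarray) -> np.ndarray:
    """Class index per residue pair, for distance prediction framed as classification.
    0 means below edges[0]; len(edges) means at or beyond the last edge."""
    return np.digitize(dmap, edges)

dmap = np.array([[0.0, 3.8, 9.5],
                 [3.8, 0.0, 6.1],
                 [9.5, 6.1, 0.0]])
print(contact_map(dmap))

# Hypothetical bin edges every 2 A from 4 A to 20 A
edges = np.arange(4.0, 22.0, 2.0)
print(distance_bins(dmap, edges))
```

The contact map keeps one bit per pair; the binned version keeps roughly where in the 4-20 Å range each pair falls, which is the "more granular" information the paragraph refers to.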

Tools for exploring how two or more molecular structures fit together

AutoDock - suite of automated docking tools designed to predict how small molecules bind to a receptor of known 3D structure

AutoDock Vina - significantly improves the average accuracy of the binding mode predictions compared to AutoDock

Gnina - deep learning framework for molecular docking; used inside DeepChem (/dock/pose_generation.py)

GOMoDo - GPCR online modeling and docking server

Smina - a fork of Vina used for minimization (local_only) as opposed to docking; it makes Vina much easier to use and is 10-20x faster at minimization. Docking performance is about the same, since partial charge calculation and file I/O are not a big part of docking runtime.

"Docking is a method which predicts the preferred orientation of one molecule to a second when bound to each other to form a stable complex. Knowledge of the preferred orientation in turn may be used to predict the strength of association or binding affinity between two molecules using scoring functions."

- Pose - A conformation of the receptor and ligand molecules showing some intermolecular interactions (which may include hydrogen bonds as well as hydrophobic contacts).

- Posing - The process of searching for a pose in which there are favorable interactions between the receptor and the ligand molecules.

- Scoring - The process of evaluating a particular pose using a number of descriptive features, like the number of intermolecular interactions, including hydrogen bonds and hydrophobic contacts.

- The best docking algorithm should be the one with the best scoring function and the best searching algorithm (source)

- No single docking method performs well for all targets, and the quality of docking results is highly dependent on the ligand and binding site of interest (source)
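The "scoring" definition above can be made concrete with a crude feature count. This sketch assumes NumPy, one element label per atom, and simple distance cutoffs (3.5 Å between N/O atoms as a stand-in for hydrogen bonds, 4.5 Å between carbons for hydrophobic contacts); real scoring functions use far more detailed geometry and chemistry. The function names and weights are hypothetical:

```python
import numpy as np

# Assumed geometric cutoffs (angstroms), not taken from any particular tool
HBOND_CUTOFF = 3.5        # N/O heavy-atom distance
HYDROPHOBIC_CUTOFF = 4.5  # carbon-carbon distance

def count_interactions(rec_xyz, rec_elem, lig_xyz, lig_elem):
    """Count crude H-bond-like and hydrophobic contacts between two molecules."""
    rec_elem, lig_elem = np.asarray(rec_elem), np.asarray(lig_elem)
    d = np.linalg.norm(rec_xyz[:, None, :] - lig_xyz[None, :, :], axis=-1)
    polar = np.isin(rec_elem, ["N", "O"])[:, None] & np.isin(lig_elem, ["N", "O"])[None, :]
    carbon = (rec_elem == "C")[:, None] & (lig_elem == "C")[None, :]
    hbonds = int(np.sum((d < HBOND_CUTOFF) & polar))
    hydrophobic = int(np.sum((d < HYDROPHOBIC_CUTOFF) & carbon))
    return hbonds, hydrophobic

def toy_score(hbonds, hydrophobic, w_hb=1.0, w_hp=0.2):
    """Higher is better; the weights are arbitrary placeholders."""
    return w_hb * hbonds + w_hp * hydrophobic

rec_xyz = np.array([[0.0, 0.0, 0.0], [5.0, 0.0, 0.0]])
lig_xyz = np.array([[2.9, 0.0, 0.0], [8.0, 0.0, 0.0]])
hb, hp = count_interactions(rec_xyz, ["O", "C"], lig_xyz, ["N", "C"])
s = toy_score(hb, hp)
print(hb, hp, s)
```

Posing would then be the search over ligand placements that maximizes a score like this one.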

In the early 1990s many approved HIV protease inhibitors were developed to target HIV infections using structure-based molecular docking. source

- Saquinavir

- Amprenavir

One of the first appearances of Molecular Docking is said to have been this 1982 paper.

They tell us Molecular Docking = "To position two molecules so that they interact favorably with one another..."

How???

Our approach is to reduce the number of degrees of freedom using simplifying assumptions that still retain some correspondence to a situation of biochemical interest. Specifically, we treat the geometric (hard sphere) interactions of two rigid bodies, where one body (the “receptor”) contains “pockets” or “grooves” that form binding sites for the second object, which we will call the “ligand”. Our goal is to fix the six degrees of freedom (3 translations and 3 orientations) that determine the best relative positions of the two objects.
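The six degrees of freedom in the quote map directly onto code: three rotation angles and three translations applied to a rigid ligand, followed by a hard-sphere overlap test. A NumPy sketch; the z-y-x angle convention, rotation about the ligand centroid, the single 1.5 Å atom radius, and all function names are assumptions for illustration, not the 1982 program:

```python
import numpy as np

def rotation_matrix(alpha, beta, gamma):
    """Rotation from three angles (radians), composed as Rz(alpha) @ Ry(beta) @ Rx(gamma)."""
    ca, sa = np.cos(alpha), np.sin(alpha)
    cb, sb = np.cos(beta), np.sin(beta)
    cg, sg = np.cos(gamma), np.sin(gamma)
    rz = np.array([[ca, -sa, 0.0], [sa, ca, 0.0], [0.0, 0.0, 1.0]])
    ry = np.array([[cb, 0.0, sb], [0.0, 1.0, 0.0], [-sb, 0.0, cb]])
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cg, -sg], [0.0, sg, cg]])
    return rz @ ry @ rx

def place_ligand(lig_xyz, pose):
    """Apply a 6-DOF pose (alpha, beta, gamma, tx, ty, tz) to a rigid ligand.
    Rotating about the centroid keeps rotation and translation independent."""
    alpha, beta, gamma, tx, ty, tz = pose
    center = lig_xyz.mean(axis=0)
    rotated = (lig_xyz - center) @ rotation_matrix(alpha, beta, gamma).T + center
    return rotated + np.array([tx, ty, tz])

def has_clash(rec_xyz, lig_xyz, radius=1.5):
    """Hard-sphere test: True if any receptor/ligand atom spheres overlap."""
    d = np.linalg.norm(rec_xyz[:, None, :] - lig_xyz[None, :, :], axis=-1)
    return bool(np.any(d < 2 * radius))

receptor = np.array([[0.0, 0.0, 0.0]])
ligand = np.array([[5.0, 0.0, 0.0], [6.0, 0.0, 0.0]])
print(has_clash(receptor, place_ligand(ligand, (0, 0, 0, 0, 0, 0))))   # ligand left in place
print(has_clash(receptor, place_ligand(ligand, (0, 0, 0, -4, 0, 0))))  # shifted onto the receptor
```

Because the molecules are rigid, every candidate configuration is just one 6-vector, which is what makes the search tractable.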

Does the program reproduce known ligand-receptor geometries? If so, does it also provide alternative structures that are geometrically reasonable? To these ends, we have examined two systems for which the ligand receptor geometry has been established by crystallographic means.

What is the result of this Docking?

(1) Structures quite near the “correct” structures are readily recovered and identified as feasible solutions. (2) Other families of structures are found that are geometrically reasonable and that can be tested by simple scoring schemes, chemical intuition, or visual inspection with computer graphics.

Without allowing molecular flexibility, many aspects of ligand-receptor interactions are not properly described.

A common approach to docking combines a scoring function with an optimization algorithm. The scoring function quantifies the favorability of the protein-ligand interactions in a single pose, which can be conceptualized as a point in a continuous conformation space. A stochastic global optimization algorithm is used to explore and sample this conformation space. Then, local optimization is employed on the sampled points, usually by iteratively adjusting the pose in search of a local extremum of the scoring function. Ideally, the scoring function is differentiable to support efficient gradient-based optimization.
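The scoring-plus-optimization recipe can be sketched on a toy problem. Here a hypothetical two-dimensional "pose" and a made-up differentiable scoring function with many local minima stand in for a real conformation space and scoring function; uniform random sampling plays the stochastic global search and plain gradient descent plays the local optimizer (real docking tools use more sophisticated choices for both):

```python
import numpy as np

def score(p):
    """Toy scoring function over 2D poses; lower is better.
    p has shape (..., 2). Many local minima; global minimum -2 at (0, 0)."""
    return (p ** 2).sum(-1) / 10 - np.cos(2 * p).sum(-1)

def score_grad(p):
    """Analytic gradient of `score`, enabling gradient-based local optimization."""
    return p / 5 + 2 * np.sin(2 * p)

def dock(n_samples=200, n_steps=500, lr=0.05, box=6.0, seed=0):
    rng = np.random.default_rng(seed)
    # Global, stochastic step: sample starting poses uniformly in the search box
    poses = rng.uniform(-box, box, size=(n_samples, 2))
    # Local step: gradient descent moves every sample to the bottom of its basin
    for _ in range(n_steps):
        poses -= lr * score_grad(poses)
    scores = score(poses)
    best = np.argmin(scores)
    return poses[best], scores[best]

best_pose, best_score = dock()
print(best_pose.round(3), round(float(best_score), 3))
```

Local descent alone would get stuck in whichever basin it starts in; the random sampling is what gives some starting point a chance to land in the global basin.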

The information obtained from docking can be used to suggest the binding energy, free energy and stability of complexes. At present, docking is used to predict the tentative binding parameters of a ligand-receptor complex beforehand.

There are various databases available that offer information on small ligand molecules, such as CSD (Cambridge Structural Database), ACD (Available Chemicals Directory), MDDR (MDL Drug Data Report) and NCI (National Cancer Institute Database).

There are two common approaches to building a score function:

- potentials of mean force

- often called statistics- or Boltzmann-based force fields

- measuring distance as a reflection of statistical tendencies within proteins

- One takes a large set of proteins, collects statistics and converts them to a score function. One then expects this function to work well for proteins not included in its parameterisation.

- an optimization calculation

- select underlying basis function

- quasi-Lennard-Jones

- various sigmoidal functions

- We can say that the correct structure is whatever is given in the Protein Data Bank, but unfortunately there is almost an infinity of incorrect structures for a sequence, and one would like the score function to penalize all of them

- One way to encode this idea is to adopt a statistical approach and try to consider the distribution of incorrect structures source

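The "collect statistics and convert them to a score function" step is usually an inverse Boltzmann relation, $E(r) = -k_BT \ln\left(P_{\text{obs}}(r) / P_{\text{ref}}(r)\right)$, where the reference distribution describes what would be seen with no preference at all. A NumPy sketch on synthetic distances; the data, bins, pseudocount and kcal/mol units are all assumptions, not taken from any published potential:

```python
import numpy as np

KT = 0.593  # k_B * T in kcal/mol at about 298 K (assumed units)

def pmf_from_samples(obs_distances, ref_distances, bins):
    """Inverse-Boltzmann potential E(r) = -kT * ln(P_obs(r) / P_ref(r)), per bin."""
    obs, _ = np.histogram(obs_distances, bins=bins)
    ref, _ = np.histogram(ref_distances, bins=bins)
    # Pseudocount of 1 avoids log(0) in empty bins
    p_obs = (obs + 1) / (obs + 1).sum()
    p_ref = (ref + 1) / (ref + 1).sum()
    return -KT * np.log(p_obs / p_ref)

rng = np.random.default_rng(0)
# Synthetic stand-ins for "statistics collected from a large set of proteins"
observed = rng.normal(loc=5.0, scale=0.7, size=5000)  # atom pairs prefer ~5 A
reference = rng.uniform(3.0, 10.0, size=5000)         # no distance preference
bins = np.arange(3.0, 10.5, 0.5)
energy = pmf_from_samples(observed, reference, bins)
centers = (bins[:-1] + bins[1:]) / 2
print("most favourable distance:", centers[np.argmin(energy)])
```

Distances seen more often than the reference predicts get negative (favourable) energies, which is exactly why such potentials are dataset dependent.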

Allowing gaps and insertions at any position and of any length leads to a combinatorial explosion of possibilities. The calculation can be made tractable by restricting the search space and forbidding gaps except in recognised loops in template structures.
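Restricting gaps to loops keeps dynamic-programming alignment cheap and structurally plausible. The sketch below is a Needleman-Wunsch global alignment in which template positions outside recognised loops simply cannot take a gap (those moves are never considered). The match/mismatch/gap scores, the boolean loop mask and the function name are hypothetical:

```python
import numpy as np

MATCH, MISMATCH, GAP = 2.0, -1.0, -2.0  # assumed toy scores

def align_score(query, template, loop):
    """Global alignment score where gaps are only allowed at template
    positions flagged as loops (loop[j] is True). -inf means no valid alignment."""
    n, m = len(query), len(template)
    F = np.full((n + 1, m + 1), -np.inf)
    F[0, 0] = 0.0

    def insertion_ok(j):
        # Extra query residue between template residues j-1 and j needs a loop on either side
        return (j > 0 and loop[j - 1]) or (j < m and loop[j])

    for i in range(n + 1):
        for j in range(m + 1):
            if i == 0 and j == 0:
                continue
            best = -np.inf
            if i > 0 and j > 0:  # align query[i-1] with template[j-1]
                s = MATCH if query[i - 1] == template[j - 1] else MISMATCH
                best = max(best, F[i - 1, j - 1] + s)
            if j > 0 and loop[j - 1]:  # template residue j-1 left unaligned
                best = max(best, F[i, j - 1] + GAP)
            if i > 0 and insertion_ok(j):  # query residue i-1 inserted
                best = max(best, F[i - 1, j] + GAP)
            F[i, j] = best
    return F[n, m]

template = "ACDEFGHIK"
loop = [c == "F" for c in template]  # pretend only F sits in a loop
print(align_score("ACDEGHIK", template, loop))
```

Forbidding most gap moves prunes the search, but the table is still O(n·m); the saving is in ruling out implausible alignments, not in asymptotic cost.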

There is a score function and a fast method for producing the best possible sequence-to-structure alignments, and thus the best possible models. Unfortunately, the problem is still not solved.

Scoring functions in molecular docking (MD) can be categorized into:

- knowledge based - statistical potentials, frequency of interaction occurrence, Boltzmann distribution, dataset dependent

- force-field based - energy functions via molecular mechanics, Coulombic interactions, van der Waals interactions (Lennard-Jones potential)
  - CHARMM (Chemistry at HARvard Macromolecular Mechanics)
  - AMBER (Assisted Model Building with Energy Refinement)

- empirical - binding free energy calculated as the weighted sum of uncorrelated terms (e.g. hydrogen bonds, hydrophobicity); regression analysis finds the best weights for each term
  - HYDE (part of BioSolveIT tools)
  - ChemScore
  - SCORE

- consensus - combines multiple scoring function types into an ensemble
  - X-CSCORE
  - MultiScore
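Two of the categories above can be sketched in a few lines of NumPy: a force-field style pair energy (12-6 Lennard-Jones plus Coulomb, the functional form behind CHARMM/AMBER-like force fields, here without their actual parameters) and an empirical function whose term weights are fitted by least-squares regression. The parameter values, the 332.06 kcal·Å/(mol·e²) Coulomb constant convention and the synthetic training data are assumptions:

```python
import numpy as np

COULOMB_K = 332.06  # kcal*A/(mol*e^2); assumes distances in A, charges in e

def lj_coulomb(r, epsilon, sigma, q1, q2, dielectric=1.0):
    """Force-field style pair energy (kcal/mol): 12-6 Lennard-Jones + Coulomb."""
    sr6 = (sigma / r) ** 6
    lennard_jones = 4.0 * epsilon * (sr6 ** 2 - sr6)  # van der Waals term
    coulomb = COULOMB_K * q1 * q2 / (dielectric * r)  # electrostatic term
    return lennard_jones + coulomb

def fit_empirical_weights(features, affinities):
    """Empirical scoring: least-squares weight per term plus an intercept.

    features: (n_complexes, n_terms), e.g. H-bond and hydrophobic contact counts.
    affinities: (n_complexes,) measured binding free energies.
    """
    X = np.column_stack([features, np.ones(len(features))])
    coef, *_ = np.linalg.lstsq(X, affinities, rcond=None)
    return coef[:-1], coef[-1]

# Hypothetical training set generated from known weights (-1.5, -0.3) and intercept 0.5
features = np.array([[1, 2], [3, 1], [0, 4], [2, 2]], dtype=float)
affinities = features @ np.array([-1.5, -0.3]) + 0.5
weights, intercept = fit_empirical_weights(features, affinities)
print(weights, intercept)  # recovers the generating weights
```

The Lennard-Jones term reaches its minimum of -epsilon at r = 2^(1/6) sigma; real empirical functions are fitted to noisy experimental affinities rather than to exact synthetic data.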

CATH/Gene3D - 151 Million Protein Domains Classified into 5,481 Superfamilies

NCBI Conserved Domains Database - resource for the annotation of functional units in proteins

Scop 2 - Structural Classification of Proteins

UniProt - comprehensive, high-quality and freely accessible resource of protein sequence and functional information.

ChimPipe - ChimPipe is a computational method for the detection of novel transcription-induced chimeric transcripts and fusion genes from Illumina Paired-End RNA-seq data. It combines junction spanning and paired-end read information to accurately detect chimeric splice junctions at base-pair resolution.

DeepNF - Deep network fusion for protein function prediction | 📖 paper

DeepPrior - predicts the probability of a gene fusion being a driver of an oncogenic process by directly exploiting the amino acid sequence of the fused protein, and it can prioritize gene fusions from different tumors. Unlike state-of-the-art tools, it also supports easy retraining and re-adaptation of the model | 📖 paper

DeFuse - gene fusion discovery using RNA-Seq data. The software uses clusters of discordant paired end alignments to inform a split read alignment analysis for finding fusion boundaries | 📖 paper

FusionCatcher - Finder of somatic fusion-genes in RNA-seq data

Jaffa - JAFFA is a multi-step pipeline that takes either raw RNA-Seq reads, or pre-assembled transcripts, then searches for gene fusions

StarFusion | 📖 paper

The 5th Annual Fusion Protein Therapeutics Conference

Fusion Oncoproteins in Childhood Cancers (FusOnC2) Consortium

Human genome = 20K+ genes 👖, each holding the instructions for building a protein; encoded within 6 feet of DNA.

DNA 🧬 is the 'instruction manual of life' (thankfully not written by Ikea 🪑 )

Life is encoded in digital form G/C || T/A nucleotide base pairs.

DNA is encoded ('transcribed') into mRNA and then decoded ('translated') into proteins. Along the way a lot of good and bad stuff happens in the latent space.

To make DNA -> RNA transcription happen, RNA polymerase reads one DNA strand, the template strand, from 3' to 5' and uses it to build a complementary single-stranded RNA in which uracil (U) takes the place of thymine (T). The RNA runs 5' to 3' and is identical to the non-template DNA strand (the coding strand), except that thymine is replaced by uracil.
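The strand bookkeeping above is easy to get backwards, so here is a pure-Python sketch (function names hypothetical) that derives the template strand from a coding strand and then transcribes it by complementary pairing. The result matches the coding strand with T replaced by U:

```python
DNA_COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}
RNA_PAIRING = {"A": "U", "T": "A", "G": "C", "C": "G"}  # RNA pairs U opposite A

def template_from_coding(coding_5to3: str) -> str:
    """Template strand written 5'->3': the reverse complement of the coding strand."""
    return "".join(DNA_COMPLEMENT[b] for b in reversed(coding_5to3))

def transcribe_template(template_5to3: str) -> str:
    """mRNA (5'->3') built by reading the template 3'->5' and pairing each base."""
    # Reading the template 3'->5' means walking its 5'->3' string backwards
    return "".join(RNA_PAIRING[b] for b in reversed(template_5to3))

coding = "ACGTTTATCGATCGA"
template = template_from_coding(coding)
mrna = transcribe_template(template)
print(template)  # TCGATCGATAAACGT
print(mrna)      # ACGUUUAUCGAUCGA
```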

```python
from Bio.Seq import Seq

dna = Seq("ACGTTTATCGATCGA")  # coding strand, written 5' -> 3'
mRNA = dna.transcribe()       # same sequence with T replaced by U
protein = mRNA.translate()    # read codon by codon into amino acids

print(dna)      # ACGTTTATCGATCGA
print(mRNA)     # ACGUUUAUCGAUCGA
print(protein)  # TFIDR
```

After transcription from the template strand into the RNA strand, translation turns the RNA into protein by reading codons: three-letter RNA sequences that each encode a specific amino acid (or a stop signal). The mapping from codons to amino acids is given by the standard codon table.
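Since the codon table has only 64 entries, it can be generated rather than typed out. This pure-Python sketch builds the standard genetic code (NCBI translation table 1) from its usual TCAG ordering and translates the mRNA from the Biopython example; the helper names are hypothetical, and Biopython's `translate()` remains the practical choice:

```python
BASES = "TCAG"
# Standard genetic code (NCBI table 1); codons ordered TTT, TTC, TTA, TTG, TCT, ...
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    a + b + c: AMINO_ACIDS[16 * i + 4 * j + k]
    for i, a in enumerate(BASES)
    for j, b in enumerate(BASES)
    for k, c in enumerate(BASES)
}

def translate(mrna: str) -> str:
    """Translate mRNA codon by codon; '*' marks a stop codon."""
    seq = mrna.replace("U", "T")           # the table is keyed on DNA letters
    usable = len(seq) - len(seq) % 3       # ignore a trailing partial codon
    return "".join(CODON_TABLE[seq[p:p + 3]] for p in range(0, usable, 3))

print(translate("ACGUUUAUCGAUCGA"))  # TFIDR
```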

Genes come in Alleles, the variations of the gene (example gene = hair color, allele = red hair 👩‍🦰 || blonde hair 👱‍♂️)

Genomics is the study of all genes in an organism to understand their molecular organization, function, interaction and evolutionary history.

Genomics begins with the discoveries of Gregor Mendel

DeepVariant - analysis pipeline that uses a deep neural network to call genetic variants from next-generation DNA sequencing data

NVIDIA Clara Parabricks Pipelines - performs secondary analysis of next-generation sequencing (NGS) DNA and RNA data at blazing fast speeds and low cost. Can analyze whole human genomes in about 45 minutes. Includes DeepVariant.

- AWS Clara Parabricks Pipeline Setup ~ $4 per hour.

BioWasm - WebAssembly modules for genomics

FastQ Bio - An interactive web tool for quality control of DNA sequencing data

Minimap2 - sequence alignment program that aligns DNA or mRNA sequences against a large reference database. For >100bp Illumina short reads, minimap2 is three times as fast as BWA-MEM and Bowtie2, and as accurate on simulated data. | paper

gBWT - graph extension (gPBWT) of the positional Burrows-Wheeler transform (PBWT)

VG - tools for working with genome variation graphs

Cello - Genetic Circuit Design

🍼 Genome in a Bottle - develop the technical infrastructure (reference standards, reference methods, and reference data) to enable translation of whole human genome sequencing to clinical practice and innovations in technologies.

Online Needleman-Wunsch Example || Example II || Great NW Colab

💭 Rosalind

💭 Great Introduction to BioInformatics Course - ELB19F

💭 Learn BioInformatics in the Browser - Sandbox Bio

☁️ Biological Modeling - Free Online Course

☁️ BioInformatic Algorithms Lecture Videos

(2021) Highly accurate protein structure prediction with AlphaFold

(2021) JANUS: Parallel Tempered Genetic Algorithm Guided by Deep Neural Networks for Inverse Molecular Design --> 💻 code

(2021) Using GANs With Adaptive Training Data to search for new molecules

(2021) Quantum Generative Models for Small Molecule Drug Discovery --> 💻 QuantumGan code

(2021) Machine learning designs non-hemolytic antimicrobial peptides

(2021) Few-Shot Graph Learning for Molecular Property Prediction --> 💻 code

(2021) Assigning Confidence To Molecular Property Prediction

(2020) Machine learning and AI-based approaches for bioactive ligand discovery and GPCR-ligand recognition

(2020) A Turing Test For Molecular Generation

(2020) Mol-CycleGAN: a generative model for molecular optimization --> 💻 code

(2020) Protein Contact Map Denoising Using Generative Adversarial Networks --> 💻 ContactGAN code

(2020) Hierarchical Generation of Molecular Graphs using Structural Motifs --> 💻 code

(2020) Deep Learning for Prediction and Optimization of Fast-Flow Peptide Synthesis

(2020) Curiosity in exploring chemical space: Intrinsic rewards for deep molecular reinforcement learning

(2020) High-Throughput Docking Using Quantum Mechanical Scoring

(2020) Deep Learning Methods in Protein Structure Prediction

(2020) GraSeq: Graph and Sequence Fusion Learning for Molecular Property Prediction --> 💻 code

(2020) Revealing cytotoxic substructures in molecules using deep learning

(2020) ChemBERTa: Large-Scale Self-Supervised Pretraining for Molecular Property Prediction

(2019) From Machine Learning to Deep Learning: Advances in scoring functions for protein-ligand docking

(2019) Deep Learning Enables Rapid Identification of Potent DDR1 Kinase Inhibitors --> 💻 GENTRL code

(2019) Junction Tree Variational Autoencoder for Molecular Graph Generation --> 💻 Code

(2019) SMILES Transformer: Pre-trained Molecular Fingerprint for Low Data Drug Discovery --> code --> 💻 code

(2019) Molecular Property Prediction: A Multilevel Quantum Interactions Modeling Perspective --> 💻 code --> 💻 more code

(2019) SMILES-BERT: Large Scale Unsupervised Pre-Training for Molecular Property Prediction

(2019) eToxPred: a machine learning-based approach to estimate the toxicity of drug candidates --> 💻 eToxPred code

(2018) Seq3Seq Fingerprint: Towards End-to-end Semi-supervised Deep Drug Discovery --> 💻 code

(2018) Chemi-Net: A molecular graph convolutional network for accurate drug property prediction

(2018) DeepFam: deep learning based alignment-free method for protein family modeling and prediction

(2018) (MOSES): A Benchmarking Platform for Molecular Generation Models --> 💻 code

(2018) DeepSMILES: An adaptation of SMILES for use in machine-learning of chemical structures --> 💻 code

(2017) Protein-Ligand Scoring with CNN

(2017) Quantum-chemical insights from deep tensor neural networks

(2016) Incorporating QM and solvation into docking for applications to GPCR targets

(2014) MRFalign: Protein Homology Detection through Alignment of Markov Random Fields

(2012) Molecular Docking: A powerful approach for structure-based drug discovery

(2011) The structural basis for agonist and partial agonist action on a β(1)-adrenergic receptor

(2011) Molecular Dynamics Simulations of Protein Dynamics and their relevance to drug discovery

(2009) Amphipol-Assisted in Vitro Folding of G Protein-Coupled Receptors

(2005) GPCR Folding and Maturation from The G Protein-Coupled Receptors Handbook

(1996) Double-mutant cycles: a powerful tool for analyzing protein structure and function

(1982) A Geometric Approach to Macromolecule-Ligand Interactions

(2021) Re-identification of individuals in genomic datasets using public face images

(2021) Accurate, scalable cohort variant calls using DeepVariant and GLnexus

(2021) Ten simple rules for conducting a mendelian randomization study

(2020) Secure large-scale genome-wide association studies using homomorphic encryption

(2020) Optimized homomorphic encryption solution for secure genome-wide association studies

(2020) Genetic drug target validation using Mendelian randomisation

(2020) Guidelines for performing Mendelian randomization investigations

(2020) The use of Mendelian randomisation to identify causal cancer risk factors: promise and limitations

(2020) A robust and efficient method for Mendelian randomization with hundreds of genetic variants

(2019) Learning Causal Biological Networks With the Principle of Mendelian Randomization

(2018) A universal SNP and small-indel variant caller using deep neural networks

(2018) Secure genome-wide association analysis using multiparty computation

(2018) Minimap2: pairwise alignment for nucleotide sequences

(2018) Reading Mendelian randomisation studies: a guide, glossary, and checklist for clinicians

(2016) The sequence of sequencers: the history of sequencing DNA

(2015) Mendelian Randomization: New Applications in the Coming Age of Hypothesis-Free Causality

(2014) MeRP: a high-throughput pipeline for Mendelian randomization analysis

(2008) Mendelian randomization: Using genes as instruments for making causal inferences in epidemiology

(2007) Capitalizing on Mendelian randomization to assess the effects of treatments

- Annoy - the standard in production nearest neighbor

- gRPC - connect your devices binary like

- Jax - the future? of domain specific ML compiling?

- Kubernetes - make all your informatics container orchestration declarative

- ONNX - make all your models interoperable

- ONNX Runtime - speed up your informatic inference

- Polars - everyone learns the hard way that Pandas doesn't cut it in the real world. It's like Arrow, only Rusty.

High Performance Computing (HPC) is often talked about as essential technology for the future and present of Bio/Chem Informatics. At its core HPC is now and forever really a special case of distributed computing.

Though there have been projects like Folding@home, it is highly likely that a lack of innovation in distributed informatics computing (and decentralization as well) will continue to hinder progress on many grand challenges in this field.

AlphaFold revealed not the solution to folding but rather that the real problem is modelling dynamic rather than static protein events, and just how far there is left to go before the surface of the problem is even scratched. On one hand, we could wait potentially forever for quantum computers to prove themselves useful; on the other, the juice can be squeezed out of parallel and distributed computing.

(2022) Deep distributed computing to reconstruct extremely large lineage trees

(2020) Bioinformatics Application with Kubeflow for Batch Processing in Clouds - together, Docker and Kubernetes become universal platforms for Infrastructure-as-a-Service (IaaS) for bioinformatics pipelines and other workloads. Most bioinformatics pipelines assume local access to POSIX-like file systems for simplicity.

(1996) RFC 1958 Architectural Principles of the Internet

In a distributed cloud, services are located or ‘distributed’ to specific locations to reduce latency, and these services enjoy a single, consistent control plane across public and private cloud environments.

AlphaFold2 is DeepMind's state-of-the-art protein structure prediction model.

AF2 predicts the 3D coordinates of all heavy atoms of a protein, using the amino acid sequence and aligned homologous sequences.

- PreProcessing

- Input Sequence

- Multiple Sequence Alignments

- Structural Templates

- Transformer (EvoFormer)

- Recycling

- Structure Module -> 3D coordinates

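```python
import jax
import jax.numpy as jnp

def softmax_cross_entropy(logits, labels):
  # labels are one-hot targets; log_softmax turns logits into log-probabilities
  loss = -jnp.sum(labels * jax.nn.log_softmax(logits), axis=-1)
  return jnp.asarray(loss)
```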
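To see what this loss computes, here is a NumPy re-implementation (NumPy stands in for `jax.numpy`, and `log_softmax` is written by hand rather than taken from `jax.nn`). With a one-hot label, the loss reduces to minus the log-probability assigned to the correct class:

```python
import numpy as np

def log_softmax(x, axis=-1):
    shifted = x - x.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def softmax_cross_entropy(logits, labels):
    # Same formula as the AF2 function, with NumPy in place of jax.numpy
    return -np.sum(labels * log_softmax(logits), axis=-1)

logits = np.array([[2.0, 0.5, -1.0]])
labels = np.array([[1.0, 0.0, 0.0]])  # one-hot: class 0 is correct
loss = softmax_cross_entropy(logits, labels)
probs = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)
print(loss, -np.log(probs[0, 0]))  # the two numbers agree
```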

If you don't know JAX's `jax.nn.log_softmax`, AF2's implementation will not mean much to you.

So, going down the rabbit hole in JAX's `nn` module, we find the log-softmax function:

(The log-softmax function, $\log\mathrm{softmax}(x)_i = x_i - \log\sum_j \exp(x_j)$, rescales elements to the range $[-\infty, 0)$.)

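```python
from typing import Optional, Tuple, Union

import jax.numpy as jnp
from jax import Array, lax

def log_softmax(x: Array, axis: Optional[Union[int, Tuple[int, ...]]] = -1) -> Array:
  # Subtract the max (with gradients stopped) so exp() cannot overflow
  shifted = x - lax.stop_gradient(x.max(axis, keepdims=True))
  return shifted - jnp.log(jnp.sum(jnp.exp(shifted), axis, keepdims=True))
```

The accepted arguments are: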

- x: input array

- axis: the axis or axes along which `log_softmax` should be computed. Either an integer or a tuple of integers.

and an array is returned.
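Why subtract the max? Adding the same constant to every element leaves log-softmax unchanged mathematically, but it keeps `exp` from overflowing. A NumPy comparison of a naive version against the shifted one (both function names are hypothetical):

```python
import numpy as np

def naive_log_softmax(x):
    return x - np.log(np.sum(np.exp(x), axis=-1, keepdims=True))

def stable_log_softmax(x):
    shifted = x - x.max(axis=-1, keepdims=True)
    return shifted - np.log(np.sum(np.exp(shifted), axis=-1, keepdims=True))

x = np.array([1000.0, 1001.0, 1002.0])
with np.errstate(over="ignore"):
    naive = naive_log_softmax(x)  # exp(1000) overflows to inf, so every entry is -inf
stable = stable_log_softmax(x)    # identical to the result for [0., 1., 2.]
print(naive)
print(stable)
```

The `lax.stop_gradient` around the max in JAX's version does not change the value either; it only keeps that subtracted constant out of the gradient computation.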

Inside this function we go further down the lane to:

`lax.stop_gradient` is the identity function; that is, it returns argument `x` unchanged. However, `stop_gradient` prevents the flow of gradients during forward or reverse-mode automatic differentiation.

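```python
# Excerpt from JAX's lax module: np is NumPy, while dtypes, _dtype and ad_util
# are JAX-internal helpers, and tree_map comes from jax.tree_util
def stop_gradient(x):
  def stop(x):
    if (dtypes.issubdtype(_dtype(x), np.floating) or
        dtypes.issubdtype(_dtype(x), np.complexfloating)):
      return ad_util.stop_gradient_p.bind(x)
    else:
      return x  # only bind primitive on inexact dtypes, to avoid some staging
  return tree_map(stop, x)
```

This in turn relies upon `tree_map`: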

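```python
# Excerpt from JAX's tree_util module, where tree_flatten is also defined
from typing import Any, Callable, Optional

def tree_map(f: Callable[..., Any], tree: Any, *rest: Any,
             is_leaf: Optional[Callable[[Any], bool]] = None) -> Any:
  leaves, treedef = tree_flatten(tree, is_leaf)
  all_leaves = [leaves] + [treedef.flatten_up_to(r) for r in rest]
  return treedef.unflatten(f(*xs) for xs in zip(*all_leaves))
```

The remaining building blocks are `jnp.log`, `jnp.sum` and `jnp.exp`.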
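The idea behind `tree_map` (flatten nested containers into leaves, apply `f` leaf-wise across one or more trees of the same shape, rebuild the structure) can be illustrated with a recursive toy. `simple_tree_map` is a hypothetical, simplified stand-in: it only treats dicts, lists and plain tuples as containers and has no `is_leaf` argument or pytree registry:

```python
from typing import Any, Callable

def simple_tree_map(f: Callable[..., Any], tree: Any, *rest: Any) -> Any:
    """Apply f leaf-wise to one or more trees with identical structure."""
    if isinstance(tree, dict):
        return {k: simple_tree_map(f, tree[k], *(r[k] for r in rest)) for k in tree}
    if isinstance(tree, (list, tuple)):
        mapped = [simple_tree_map(f, t, *(r[i] for r in rest)) for i, t in enumerate(tree)]
        return type(tree)(mapped)  # rebuild a list as a list, a tuple as a tuple
    return f(tree, *rest)  # anything else is a leaf

params = {"w": [1.0, 2.0], "b": (3.0,)}
grads = {"w": [0.1, 0.2], "b": (0.3,)}
updated = simple_tree_map(lambda p, g: p - g, params, grads)  # gradient-step style update
print(updated)
```

This is why `stop_gradient` works on whole parameter dictionaries: `tree_map` hands each array leaf to `stop` and reassembles the original structure.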

Automatic Differentiation Lecture Slides

Chemistry 2E - Equivalent to 201 & 202 Level Chemistry Book


Chemistry: Atoms First 2E - Fork of 2E but now with more Atoms!!!!


Biology 2E 👽 Like Chemistry 2E but Biology

Artificial Intelligence: A Modern Approach 🤖 The Gospel of Machine Learning

Neural Networks and Deep Learning 🤖 Michael Nielsen writes another masterpiece - About Deep Learning - if you are into that sort of thing.

Reinforcement Learning 🤖 The only book you need on the subject

Pattern Recognition and Machine Learning 🤖 Another classic banger