TransGrasp: Grasp Pose Estimation of a Category of Objects by Transferring Grasps from Only One Labeled Instance

Hongtao Wen*, Jianhang Yan*, Wanli Peng†, Yi Sun

Dalian University of Technology

* equal contributions † corresponding author

project page | arXiv | Springer Link | Youtube

This is the official repo of "TransGrasp: Grasp Pose Estimation of a Category of Objects by Transferring Grasps from Only One Labeled Instance" (ECCV 2022).

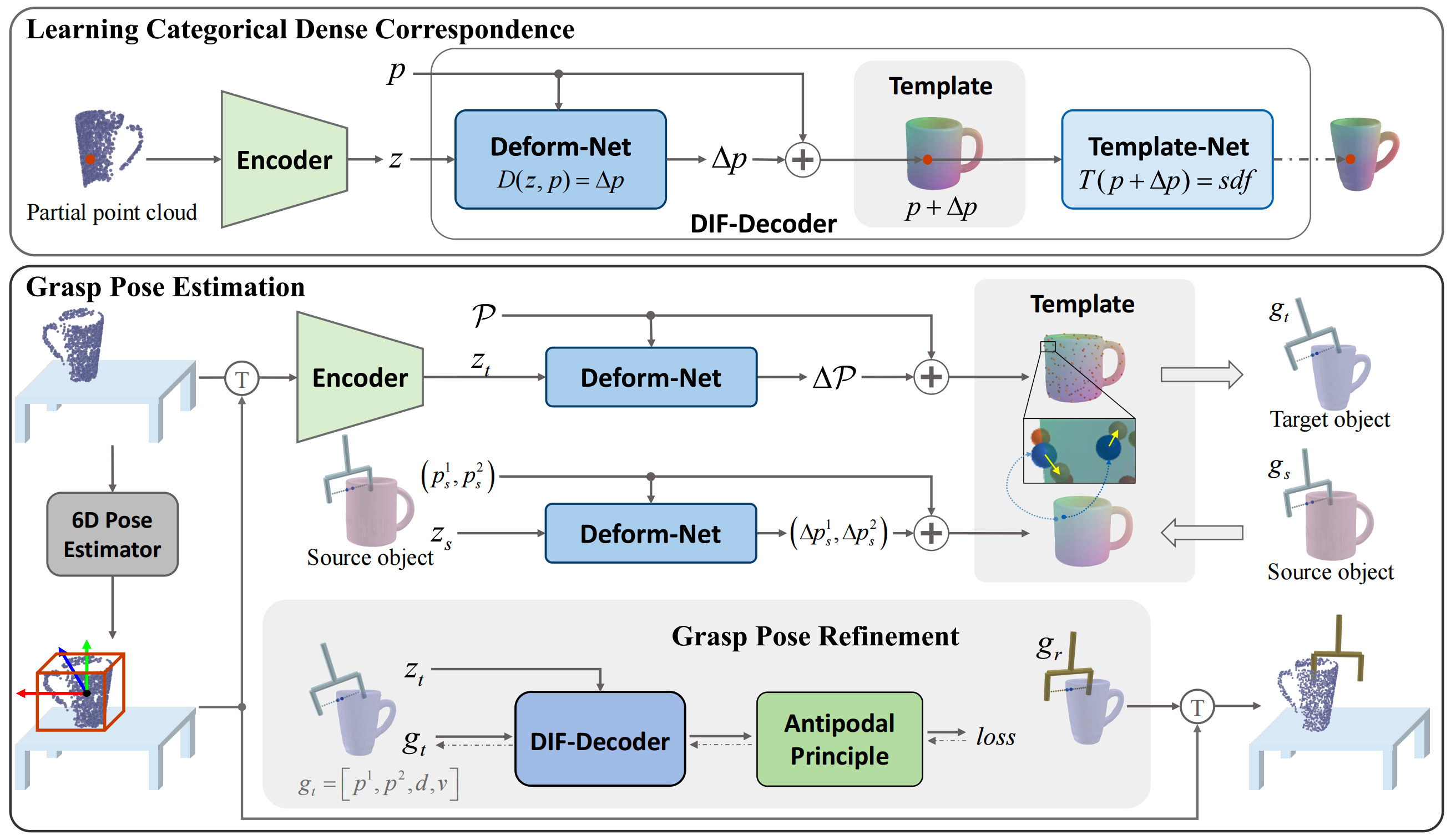

Grasp pose estimation is an important issue for robots interacting with the real world. However, most existing methods require either exact 3D object models available beforehand or a large number of grasp annotations for training. To avoid these problems, we propose TransGrasp, a category-level grasp pose estimation method that predicts grasp poses for a category of objects by labeling only one object instance. Specifically, we transfer grasp poses across a category of objects based on their shape correspondences and propose a grasp pose refinement module that further fine-tunes the gripper's grasp pose to ensure successful grasps. Experiments demonstrate the effectiveness of our method in achieving high-quality grasps with the transferred grasp poses.

- Ubuntu 18.04 or 20.04
- Python 3.7
- PyTorch 1.8.1
- torchmeta 1.8.0
- Isaac Gym 1.0.preview2 (Google | Baidu:lf30)
- NVIDIA driver version >= 460.32
- CUDA 10.2
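An installed version can be checked against the minimums listed above by comparing dotted version strings numerically. The helper below is a minimal sketch, not part of this repo: `parse_version` and `version_at_least` are hypothetical names, and the code assumes purely numeric dotted versions (it would not handle a suffix such as `1.0.preview2`).

```python
def parse_version(text):
    """Split a dotted numeric version such as '460.32' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def version_at_least(found, minimum):
    """Return True if `found` is the same as or newer than `minimum`."""
    a, b = parse_version(found), parse_version(minimum)
    # Pad the shorter tuple with zeros so '460' compares like '460.0'.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    # Example values only; substitute the driver version reported on your machine.
    print(version_at_least("470.57.02", "460.32"))
    print(version_at_least("450.80", "460.32"))
```

Comparing integer tuples avoids the pitfall of plain string comparison, where "1000.1" would sort before "460.32".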

Use conda to create a virtual environment and install the essential packages:

conda create -n transgrasp python=3.7

conda activate transgrasp

cd path/to/TransGrasp

conda install pytorch==1.8.1 torchvision==0.9.1 cudatoolkit=10.2 -c pytorch

conda install -c fvcore -c iopath -c conda-forge fvcore iopath

conda install pytorch3d -c pytorch3d

pip install -r requirements.txt

# Install Isaac Gym (isaacgym/docs/install.html):

cd path/to/isaacgym/python

pip install -e .

# For Ubuntu 20.04, which does not have a libpython3.7 package, you need to set the LD_LIBRARY_PATH variable appropriately:

export LD_LIBRARY_PATH=/home/you/anaconda3/envs/transgrasp/lib
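The same value can be derived from the active environment instead of being typed by hand. The sketch below is an illustration under stated assumptions, not part of this repo: `with_env_lib` is a hypothetical helper that places `<prefix>/lib` first in an existing `LD_LIBRARY_PATH` value, where `<prefix>` is assumed to be the conda environment root (`sys.prefix` when run inside the activated environment).

```python
import os
import sys


def with_env_lib(prefix, current=""):
    """Return an LD_LIBRARY_PATH value with `<prefix>/lib` placed first."""
    lib_dir = os.path.join(prefix, "lib")
    parts = [p for p in current.split(os.pathsep) if p]
    # Avoid listing the same directory twice.
    parts = [lib_dir] + [p for p in parts if p != lib_dir]
    return os.pathsep.join(parts)


if __name__ == "__main__":
    value = with_env_lib(sys.prefix, os.environ.get("LD_LIBRARY_PATH", ""))
    # Print a line that can be pasted into the shell before launching Isaac Gym.
    print("export LD_LIBRARY_PATH=" + value)
```

The script prints the `export` line rather than modifying `os.environ`, because the dynamic loader reads the variable when a process starts; it needs to be set in the shell before Python is launched.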

- Download pre-aligned mesh models from the ShapeNetCore dataset.
- Download source meshes and grasp labels for the mug, bottle and bowl categories (Google | Baidu:7qcd) from the ShapeNetSem and ACRONYM datasets.

- Set the parameters in scripts/preprocess_data_*.sh to match your setup before running the following commands for data preparation. (Here we take mug as an example.)

# For inference only, run:

sh scripts/preprocess_data_eval.sh mug

# For training & inference, run:

sh scripts/preprocess_data.sh mug

- To train & evaluate DIF-Decoder, run the following commands.

python DIF_decoder/train.py --config DIF_decoder/configs/train/mug.yml

python DIF_decoder/evaluate.py --config DIF_decoder/configs/eval/mug.yml

- To train Shape Encoder, run the following commands.

python shape_encoder/write_gt_codes.py --category mug --config DIF_decoder/configs/generate/mug.yml

python shape_encoder/train.py --category mug --model_name mug

- To train 6D Pose Estimator, run the following command.

python pose_estimator/train.py --category mug --model_name mug

Before inference, train the networks mentioned above or download our pretrained models (Google | Baidu:6urd), then arrange the pretrained models as follows:

    |-- TransGrasp
        |-- DIF_decoder
            |-- logs
                |-- bottle
                |-- bowl
                |-- mug
                    |-- checkpoints
                        |-- model.pth
        |-- shape_encoder
            |-- output
                |-- bottle
                |-- bowl
                |-- mug
                    |-- checkpoints
                        |-- model.pth
        |-- pose_estimator
            |-- output
                |-- bottle
                |-- bowl
                |-- mug
                    |-- checkpoints
                        |-- model.pth
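Before running inference, it can help to confirm that the checkpoints sit where this layout expects them. The following is a minimal sketch, not part of this repo: `expected_checkpoints` and `missing_checkpoints` are hypothetical helpers, and the code assumes that the bottle and bowl folders follow the same `checkpoints/model.pth` structure shown for mug.

```python
import os

# Sub-directories that hold each network's checkpoints, per the layout above.
CHECKPOINT_DIRS = {
    "DIF_decoder": os.path.join("DIF_decoder", "logs"),
    "shape_encoder": os.path.join("shape_encoder", "output"),
    "pose_estimator": os.path.join("pose_estimator", "output"),
}
CATEGORIES = ("bottle", "bowl", "mug")


def expected_checkpoints(root, categories=CATEGORIES):
    """List every model.pth path implied by the layout (assumed equal per category)."""
    paths = []
    for base in CHECKPOINT_DIRS.values():
        for category in categories:
            paths.append(
                os.path.join(root, base, category, "checkpoints", "model.pth")
            )
    return paths


def missing_checkpoints(root, categories=CATEGORIES):
    """Return the expected checkpoint paths that do not exist on disk."""
    return [p for p in expected_checkpoints(root, categories) if not os.path.isfile(p)]


if __name__ == "__main__":
    # Run from the TransGrasp repository root.
    for path in missing_checkpoints("."):
        print("missing:", path)
```

Passing `categories=("mug",)` restricts the check to a single category when only that one is needed.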

Additionally, please download the assets we used for Isaac Gym (Google | Baidu:jl7r) and put the assets folder under ./isaac_sim/.

Then, you can run the commands below to generate grasp poses following the procedures in our paper.
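The four generation steps in this section (grasp representation, grasp transfer, grasp refinement, and grasp pose estimation) can also be assembled per category from a script. The sketch below only builds and prints the commands; `inference_commands` is a hypothetical helper, not part of this repo, and it assumes the script paths, flags, and checkpoint locations given in this README.

```python
import shlex


def inference_commands(category):
    """Return the four inference steps for one category as argument lists."""
    encoder = f"./shape_encoder/output/{category}/checkpoints/model.pth"
    pose = f"./pose_estimator/output/{category}/checkpoints/model.pth"
    return [
        # 1. Grasp representation: convert, filter, and map source grasps.
        ["sh", "scripts/preprocess_source_grasps.sh", category],
        # 2. Grasp transfer from the Template Field to target models.
        ["python", "grasp_transfer/transfer_main.py",
         "--category", category, "--shape_model_path", encoder],
        # 3. Grasp refinement of the transferred grasps.
        ["python", "grasp_transfer/refine_main.py", "--category", category],
        # 4. Grasp pose estimation with the selected best grasp.
        ["python", "grasp_transfer/main.py",
         "--category", category,
         "--shape_model_path", encoder, "--pose_model_path", pose],
    ]


if __name__ == "__main__":
    # Print the commands instead of running them, so they can be reviewed first.
    for argv in inference_commands("mug"):
        print(" ".join(shlex.quote(arg) for arg in argv))
```

Each argument list could be passed to `subprocess.run(argv, check=True)` from the repository root to execute the steps in order, stopping at the first failure.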

- Grasp Representation. Run the following command to convert the grasp representation, filter source grasps, and transfer grasps on the source model to the Template Field.

sh scripts/preprocess_source_grasps.sh mug

- Grasp Transfer. Run the following command to transfer grasps on the Template Field to target models.

ENCODER_MODEL_PATH=./shape_encoder/output/mug/checkpoints/model.pth

python grasp_transfer/transfer_main.py --category mug --shape_model_path ${ENCODER_MODEL_PATH}

- Grasp Refinement. Run the following command to refine the grasps transferred from the source model.

python grasp_transfer/refine_main.py --category mug

- Grasp Pose Estimation with the selected best grasp. After running the following command, the selected grasps will be saved in grasp_data/mug_select/.

ENCODER_MODEL_PATH=./shape_encoder/output/mug/checkpoints/model.pth

POSE_MODEL_PATH=./pose_estimator/output/mug/checkpoints/model.pth

python grasp_transfer/main.py --category mug --shape_model_path ${ENCODER_MODEL_PATH} --pose_model_path ${POSE_MODEL_PATH}

- Ablation Study. The experiments include Direct Mapping (+refine) and Grasp Transfer (+refine). Results will be saved in ./isaac_sim/results/.

# Parameters: 1. category_name 2. exp_name

sh scripts/run_ablations.sh mug mug

Transfer Success Rate (%) for each category in the main paper:

| Method | Mug | Bottle | Bowl | Average |
|---|---|---|---|---|
| Direct Mapping | 60.68 | 79.81 | 33.27 | 57.92 |
| Direct Mapping w/ Refine | 59.26 | 79.86 | 34.83 | 57.98 |
| Grasp Transfer | 82.67 | 86.93 | 46.24 | 71.95 |
| Grasp Transfer w/ Refine | 87.77 | 87.24 | 61.52 | 78.84 |

- Comparison with other grasp pose estimation methods. Run the following command to test the best grasp for every object generated by our proposed TransGrasp.

Note that Isaac Gym performs convex decomposition of each mesh during the first simulation, which takes a long time. Please be patient.

python isaac_sim/sim_all_objects_best_grasp.py --category mug --pkl_root grasp_data/mug_select

Grasp Success Rate (%) for each category in the main paper:

| Method | Mug | Bottle | Bowl | Average |
|---|---|---|---|---|
| TransGrasp | 86.67 | 88.00 | 72.84 | 82.50 |
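The Average column in both result tables is consistent with an unweighted mean over the three categories. The short check below recomputes it from the reported per-category numbers and also shows the per-category change that refinement brings to Grasp Transfer. It is a sketch for reading the tables, not part of the evaluation code; the function names are hypothetical, and the paper may define the average differently.

```python
# Success rates (%) copied from the two result tables: (mug, bottle, bowl).
RATES = {
    "Direct Mapping": (60.68, 79.81, 33.27),
    "Direct Mapping w/ Refine": (59.26, 79.86, 34.83),
    "Grasp Transfer": (82.67, 86.93, 46.24),
    "Grasp Transfer w/ Refine": (87.77, 87.24, 61.52),
    "TransGrasp": (86.67, 88.00, 72.84),
}


def category_average(rates):
    """Unweighted mean of the per-category success rates, rounded to 2 decimals."""
    return round(sum(rates) / len(rates), 2)


def refinement_gain(before, after):
    """Per-category change in success rate (percentage points) after refinement."""
    return tuple(round(a - b, 2) for b, a in zip(before, after))


if __name__ == "__main__":
    for method, rates in RATES.items():
        print(method, category_average(rates))
    print(refinement_gain(RATES["Grasp Transfer"], RATES["Grasp Transfer w/ Refine"]))
```

On these numbers, refinement changes Grasp Transfer by about 5.10, 0.31, and 15.28 percentage points for mug, bottle, and bowl respectively, so most of the gain comes from the bowl category.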

This repo is based on DIF-Net, PointNet, and object-deformnet. Many thanks to the authors for their excellent work.

@inproceedings{wen2022transgrasp,
  title={TransGrasp: Grasp pose estimation of a category of objects by transferring grasps from only one labeled instance},
  author={Wen, Hongtao and Yan, Jianhang and Peng, Wanli and Sun, Yi},
  booktitle={European Conference on Computer Vision},
  pages={445-461},
  year={2022},
  organization={Springer}
}

Our code is released under the MIT License.