CAUTION: If you plan on running a specific branch, please read the README.md in that branch. This README.md is only valid for the current branch.

- Overview

- Structure, Interfaces and Flow of Information

- List of Controllers, Primitives, ECs

- Hardware Dependencies

- Install

- Usage

- Examples

This planning framework generates contact-rich motion sequences to grasp objects. Within this planning framework, we propose a novel view of grasp planning that centers on the exploitation of environmental contact. In this view, grasps are sequences of constraint exploitations, i.e. consecutive motions constrained by features in the environment, ending in a grasp. To be able to generate such grasp plans, it becomes necessary to consider planning, perception, and control as tightly integrated components. As a result, each of these components can be simplified while still yielding reliable grasping performance. This implementation is based on:

Clemens Eppner and Oliver Brock. "Planning Grasp Strategies That Exploit Environmental Constraints"

Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), pp. 4947 - 4952, 2015.

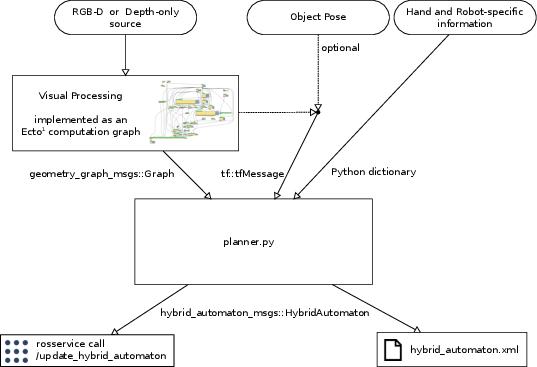

This is the structure of the planning framework:

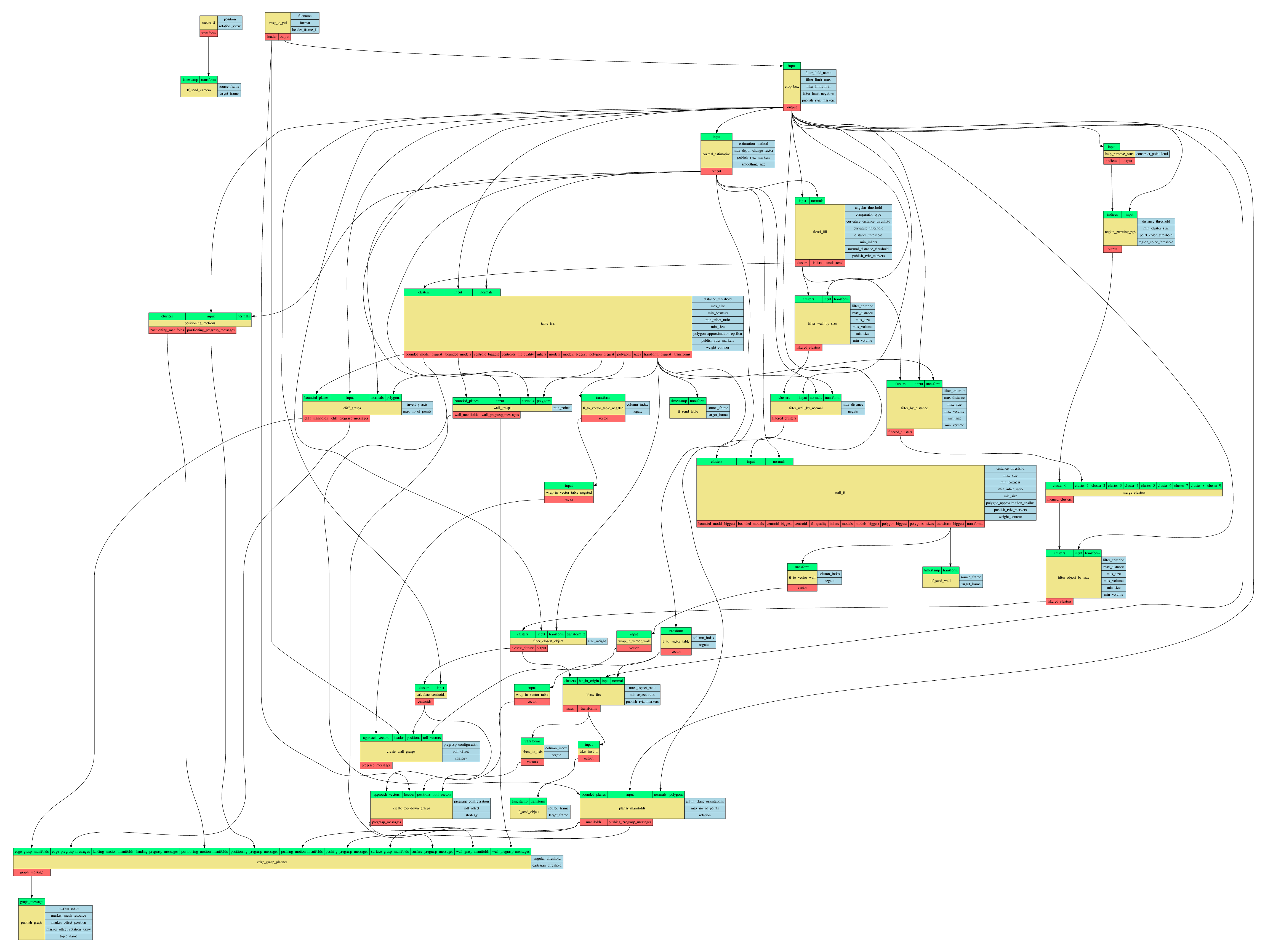

It consists of a visual processing component and a planning module. The visual processing component detects planar surfaces, convex, and concave edges in a point cloud and represents them in a graph structure. This component is based on Ecto, a computation graph framework for C++/Python. The computations are organized as a directed acyclic graph of computing cells connected by typed edges. Single computing cells do simple operations such as clustering, segmentation, or fitting models. The following diagram shows the computation graph for the grasp planning framework:

Here, a segmentation soup is generated by different segmentation algorithms based on depth and color. For these segments, planes and edges are fitted. The final output is a geometry graph that describes the spatial structure of the environment.

The planning module takes this spatial graph as input and combines it with information about the object pose and the type of robotic hand and arm into a planning problem. This planning problem is represented in a STRIPS-like fashion and solved using A* search. The output of the planner is a sequence of motions interspersed with contact sensor events.

Summing up, the input to the planning framework is given by:

- Point Cloud: This can be provided either by a real RGB-D sensor (see Example 2), a recorded point cloud (see Example 1), or even by a simulated sensor (see Example 3).

- Object Pose: This is optional and can also be provided by the Ecto graph computation using a simple heuristic: select the point cluster that is closest to the largest planar surface in the scene.

- Hand and Robot-specific Information: This defines how a particular hand slides across a surface, closes its fingers etc. It also includes robot-specific things such as f/t sensor thresholds or velocities. For new hands and/or arms this can be easily extended.

The usual output of a robot motion planner are joint-configuration trajectories. This planner is different. It outputs so-called hybrid automata. A hybrid automaton is a finite state machine whose states are continuous feedback controllers (based on position, velocity, force, etc.) and transitions are discrete sensor events. This is because position trajectories lack the expressive power that is needed to capture the feedback-driven contact-rich motions considered here. Hybrid automata are much more suited in this context. As a consequence any entity that wants to execute the generated plans needs to be capable of interpreting those hybrid automata descriptions. We use a C++ library that allows serialization/desirialization and can be used to wrap robot-specific interfaces as shown in Example 3.

List of primitives: Positioning, sliding, Caging, EdgeGrasp, WallGrasp, SurfaceGrasp The primitives are based on Clemens Eppner and Oliver Brock. "Planning Grasp Strategies That Exploit Environmental Constraints"

List of controllers: joint controller, operational space controller, sliding controller, RBO-hand controller, Pisa-IIT-hand controller

List of jump conditions: time based, F/T measurement based, joint configuration based, frame pose based

List of ECs: Surface, Edge, Wall

This table lists the tested hardware dependencies of the planner by SoMa partner:

T: tested

S: tested in simulation (gazebo)

F: failed

| TUB | UNIPI | IIT | Ocado | Disney | |

|---|---|---|---|---|---|

| Hand | RBO Hand2 (T) Pisa IIT Hand | RBO Hand2 (T) Pisa/IIT Hand RBO Hand2 v2 (T) Pisa/IIT Hand v2 Pisa/IIT Softgripper |

|||

| Arms | WAM (T) KUKA iiwa (S) | KUKA LBR iiwa14 (T) Staubli RX160L |

|||

| Force-Torque Sensor | ATI FTN-Gamma Sensors (T) | Optoforce HEX-70-XE-200N (T) | |||

| RGB-D Sensor | ASUS Xtion Pro Live (T) | Primesense Carmine 1.08/9(T) Kinect v2 |

|||

| API | ROS/MoveIt | ||||

This code was tested with ROS indigo under Ubuntu 14.04.5 (LTS). To follow our build instructions you need to build with catkin tools (apt-get install python-catkin-tools)

- Clone this repository

git clone https://github.com/SoMa-Project/ec_grasp_planner.git

- Build the geometry messages (don't build any other projects form this repository yet)

catkin build geometry_graph_msgs

- Clone the ROS stack ecto_rbo in your catkin workspace and build it:

git clone https://github.com/SoMa-Project/vision.git

and follow the instructions on https://github.com/SoMa-Project/vision/blob/master/README.md

- Get PyDDL:

pip install -e git+https://github.com/garydoranjr/pyddl.git#egg=pyddl

- Get the ROS package hybrid_automaton_msgs:

git clone https://github.com/tu-rbo/hybrid_automaton_msgs.git

- Get the ROS package hybrid_automaton_manager_kuka if the Kuka interface is needed:

git clone https://github.com/SoMa-Project/hybrid_automaton_manager_kuka.git

- Get Gazebo multi-robot simulator, version 2.2.6:

sudo apt-get install ros-indigo-gazebo-*

- Get iiwa_stack:

git clone https://github.com/SalvoVirga/iiwa_stack.git

cd iiwa_stack

git checkout 94670d70b9bfbf0920c7de539012c805734fdbc5

catkin build iiwa

-

Get hybrid_automaton_library and install it by following the readme instructions.

-

Build hybrid_automaton_manager_kuka according to the instructions (do not forget to link the robot files).

Now, you can clone this repository into your catkin workspace and build the ROS package:

git clone https://github.com/SoMa-Project/ec_grasp_planner.git

cd ec_grasp_planner

git submodule init

git submodule update

catkin build ec_grasp_planner

planner.py [-h] [--ros_service_call] [--file_output]

[--rviz][--robot_base_frame ROBOT_BASE_FRAME]

[--object_frame OBJECT_FRAME] [--object_params_file]

Find path in graph and turn it into a hybrid automaton.

optional arguments:

-h, --help show this help message and exit

--ros_service_call Whether to send the hybrid automaton to a ROS service

called /update_hybrid_automaton. (default: False)

--file_output Whether to write the hybrid automaton to a file called

hybrid_automaton.xml. (default: False)

--rviz Whether to send marker messages that can be seen in

RViz and represent the chosen grasping motion.

(default: False)

--robot_base_frame ROBOT_BASE_FRAME

Name of the robot base frame. (default: base_link)

--object_frame OBJECT_FRAME

Name of the object frame. (default: object)

--object_params_file

Name of the file containing parameters for object-EC selection when multiple objects are present

(default: object_param.yaml)

This will start the planner node which then waits for a service call.

Step 1: start the planner in the background in simulation:

rosrun ec_grasp_planner planner.py --rviz --file_output --robot_base_frame world

for the real world demo in the RBO lab use instead:

rosrun ec_grasp_planner planner.py --rviz --file_output

Step 2, call the rosservice

rosservice call /run_grasp_planner "object_type: 'Apple' grasp_type: 'SurfaceGrasp' handarm_type: 'RBOHand2Kuka' object_heuristic_function: Random"

object_type can be specified to do certain object-specific behaviours. Right now there is only a default behaviour which is the same for all objects.

grasp_type should be one of {Any,EdgeGrasp,WallGrasp,SurfaceGrasp}. In this version only SurfaceGrasp and WallGrasp is supported.

handarm_type should match your specific robot/hand combination, i.e. RBOHand2Kuka for the rbo hand mounted omn the KUKA iiwa. The value must match one of the class names in handarm_parameters.py.

object_heuristic_function should be one of {Random, Deterministic, Probabilistic}. This parameter selects one of the three heuristic functions for multi-object and multi-EC selection. The planner assumes that all EC are exploitable for all objects. The multi-object-EC heuristics are:

- Random: select one object-EC-pair randomly, independent of heuristic values

- Deterministic: pick the maximum of a heuristic function:

(object,ec) = argmax q(object_i, ec_j) i in 1..n & j in 1..m.q()is taken from a parameter filemulti_object_params.pywhich contains probability values for given objects (use-case relevant) and strategies (Surface-, Wall-, EdgeGrasp). The default parameter file is in./data/object_param.yaml. - Probabilistic: Use the heuristic function as a prior for sampling random strategies: samples from the pdf given by the

Q[n x m]matrix, whereQ[i,j] = q(object_i, ec_j)

apple:

SurfaceGrasp: {'success': 1} # Success rate for Surface grasping an apple is 100%

WallGrasp: {'success': 1} # Success rate for Wall grasping an apple is 100%

EdgeGrasp: {'success': 0} # Success rate for Edge grasping an apple is 0%

Advanced object-ec relational parameter definition:

cucumber:

SurfaceGrasp: {'success': 1, 'min': [-0.14, -0.1], 'max': [0.14, 0.05]}

WallGrasp: {'success': [1, 0.8, 0.7, 0] , 'angle': [0, 180, 360], 'epsilon': 20}

EdgeGrasp: {'success': 0}

Objcet-IFCO relative position

Here the SurfaceGrasp strategy success is as given (in this case 1) if the object is within a certain area within the IFCO. The min and max aprameters defin a cropbox inside the IFCO, this cropbox helps to exclude grasp that are infeasable due to possible collision or work space limitation. The reference frame is the IFOC Frame and the min vecor is min: [min_x_distance_x, min_y_distance] for which we compare the object frame relative to IFCO frame such that object_x > min_x_distance && object_y > min_y_distance. Similarly done for the max: [max_x_distance_x, max_y_distance] parameter. If the object is not within the cropbox, the success is 0.

Object-EC relative oriantation

Here the WallGrasp strategy success depend on the relative orientation of the cucumber to the wall. We define a set of possible grasping angles in degrees [0, 180, 360] and a success rate [1, 0.8, 0.7] for each angle. Important: the last element in the success rate vector gives the success in other cases. epsilon is an upper and lower bound on how exact orientation should be (0 - as precises as given in the angle vector, 10 - 10 deg > |current orientation - reference| )

This example shows a planned grasp in RViz based on a PCD file that contains a single colored point cloud of a table-top scene with a banana placed in the middle.

roscore

# if you want to change which pcd to read, change the file name in the ecto graph yaml

rosrun ecto_rbo_yaml plasm_yaml_ros_node.py `rospack find ec_grasp_planner`/data/geometry_graph_example1.yaml --debug

# start visualization

rosrun rviz rviz -d `rospack find ec_grasp_planner`/configs/ec_grasps_example1.rviz

# select which type of grasp you want

rosrun ec_grasp_planner planner.py --rviz --robot_base_frame camera_rgb_optical_frame

# execute grasp

rosservice call /run_grasp_planner "object_type: 'Apple' grasp_type: 'SurfaceGrasp' handarm_type: 'RBOHand2Kuka'"

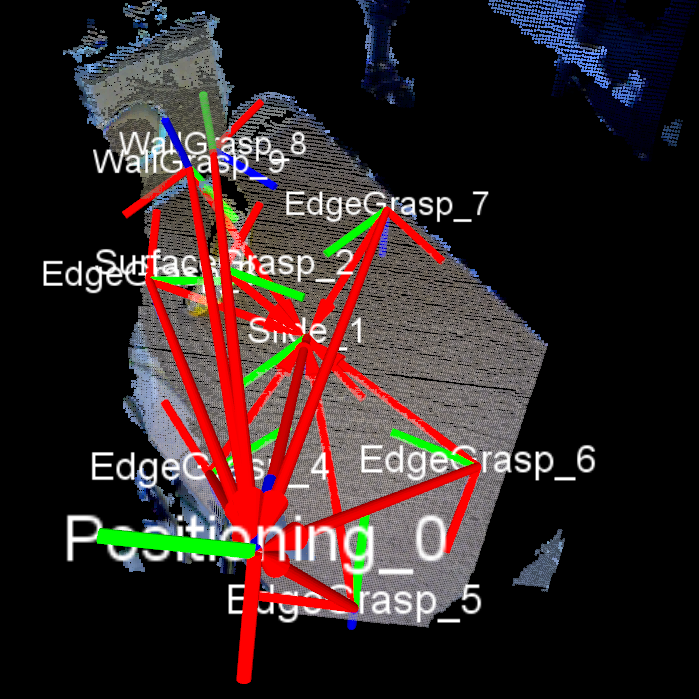

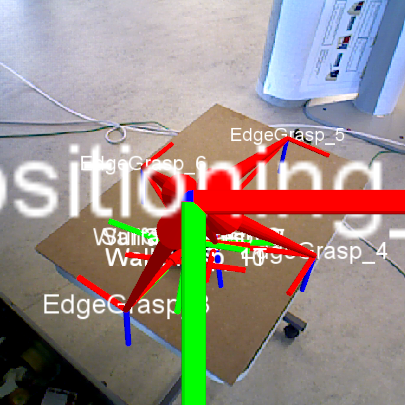

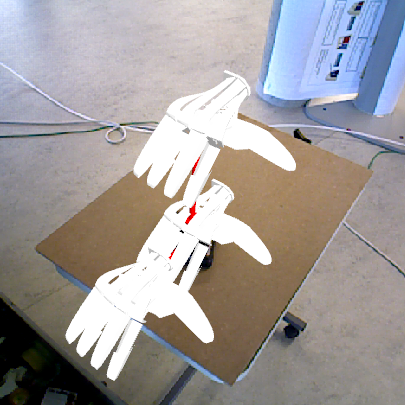

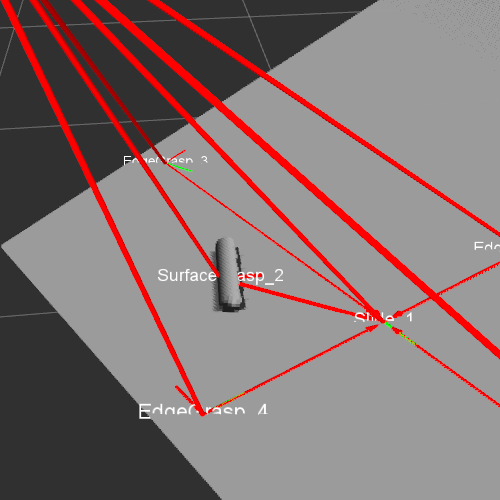

In RViz you should be able to see the geometry graph and the wall grasp published as visualization_msgs/MarkerArray under the topic names geometry_graph_marker and planned_grasp_path:

This example shows how to use the planner with an RGB-Depth sensor like Kinect or Asus Xtion. It uses the camera drivers provided in ROS:

# plug the camera into your computer

roslaunch openni2_launch openni2.launch depth_registration:=true

# set camera resolution to QVGA

rosrun dynamic_reconfigure dynparam set /camera/driver ir_mode 7

rosrun dynamic_reconfigure dynparam set /camera/driver color_mode 7

rosrun dynamic_reconfigure dynparam set /camera/driver depth_mode 7

rosrun ecto_rbo_yaml plasm_yaml_ros_node.py `rospack find ec_grasp_planner`/data/geometry_graph_example2.yaml --debug

# start visualization

rosrun rviz rviz -d `rospack find ec_grasp_planner`/configs/ec_grasps_example2.rviz

# select an edge grasp and visualize the result in RViz

rosrun ec_grasp_planner planner.py --robot_base_frame camera_rgb_optical_frame --rviz

# execute grasp

rosservice call /run_grasp_planner "object_type: 'Punnet' grasp_type: 'SurfaceGrasp' handarm_type: 'RBOHand2Kuka'"

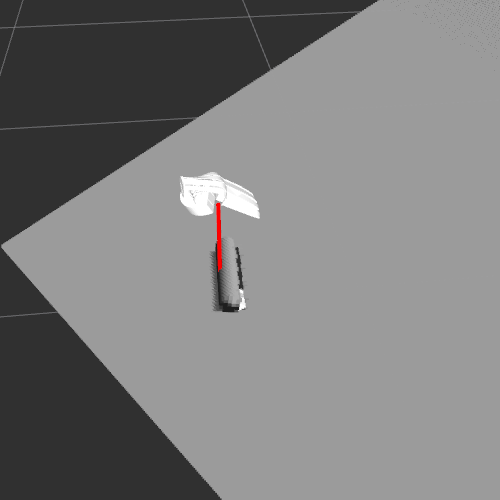

Depending on your input the result in RViz could look like this:

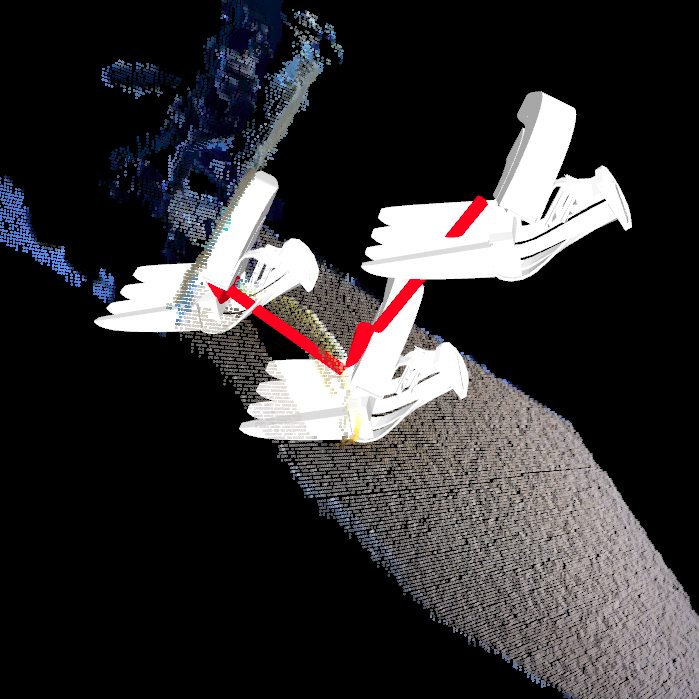

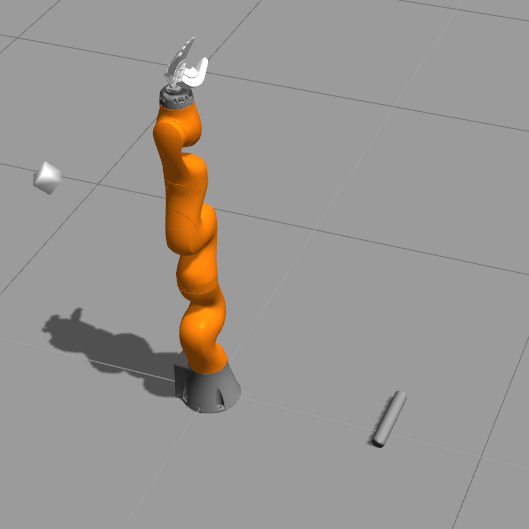

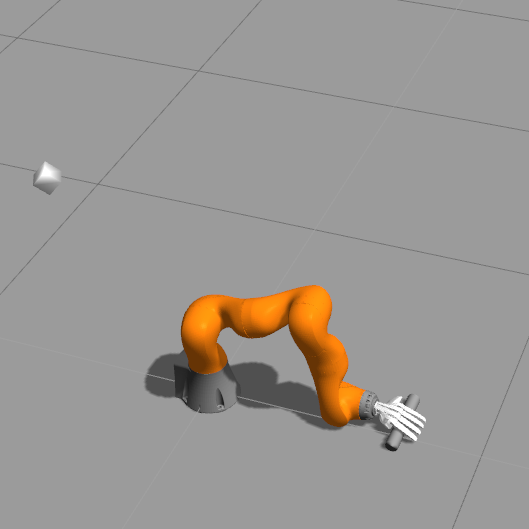

This example shows the execution of a planned hybrid automaton motion in the Gazebo simulator.

# Step 1: make sure the simulation time is used

roslaunch hybrid_automaton_manager_kuka launchGazebo.launch

# Step 2: start the simulation environment and kuka control manager

rosrun hybrid_automaton_manager_kuka hybrid_automaton_manager_kuka

# Step 3: run vision code

rosrun ecto_rbo_yaml plasm_yaml_ros_node.py `rospack find ec_grasp_planner`/data/geometry_graph_example3.yaml --debug

# Step 4 (optional): to check potential grasps

rosrun rviz rviz -d `rospack find ec_grasp_planner`/configs/ec_grasps.rviz

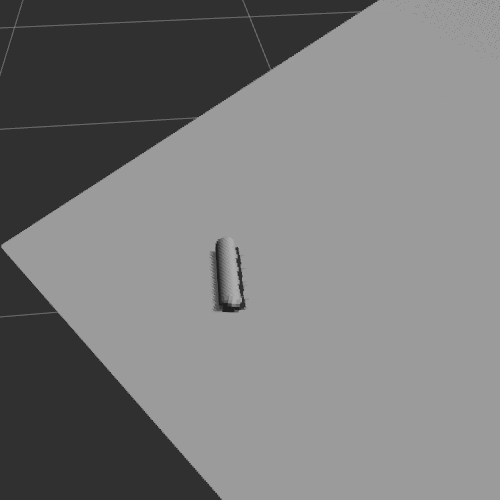

In RViz you should be able to see the point cloud simulated in Gazebo and the geometry graph published as visualization_msgs/MarkerArray under the topic name geometry_graph_marker:

# Step 5: select a surface grasp, visualize and execute it

roscd hybrid_automaton_manager_kuka/test_xmls/

rosrun ec_grasp_planner planner.py --grasp SurfaceGrasp --ros_service_call --rviz --handarm RBOHand2Kuka [need to ctrl-c once done]

./ha_send_xml.sh hybrid_automaton.xml

In RViz you should be able to see the planned surface grasp and in Gazebo the robot moves its hand towards the cylinder until contact (https://youtu.be/Q91U9r83Vl0):