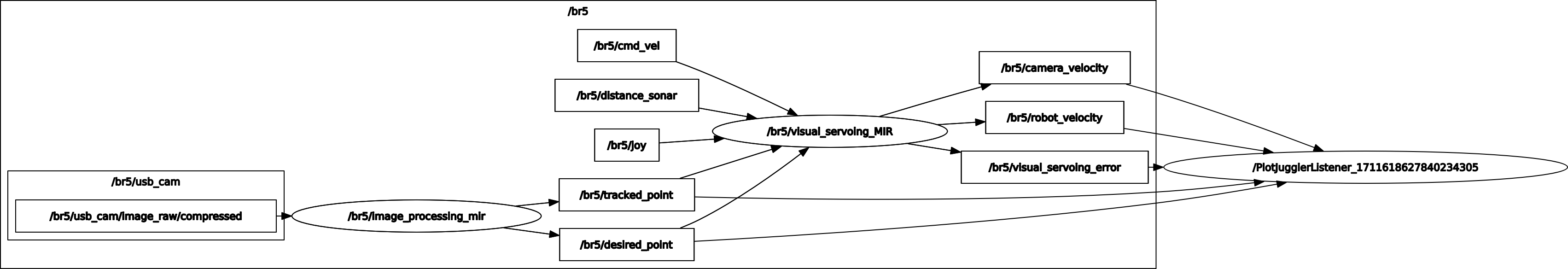

The objective is to implement visual servoing with OpenCV by detecting an orange buoy in an underwater environment. We then implement a Proportional (P) controller based on an interaction matrix to regulate selected degrees of freedom of a BlueROV. The controller first regulates yaw and heave, then sway and heave. Finally, it regulates five degrees of freedom, i.e. all except pitch.

Group Members:

-

Prerequisites:

Ubuntu 20.04 or 18.04 LTS

ROS: Melodic for Ubuntu 18.04, Noetic for Ubuntu 20.04

A local installation of mavros.

It is also possible to install the mavros packages from the network, but some of the code may no longer be compatible with the embedded version.

-

Cloning the Project Repository:

git clone git@github.com:abhimanyubhowmik/Visual_Servoing.git

sudo apt-get install ros-melodic-joy

cd bluerov_ws

catkin build

catkin build -j1 -v

# Image processing

source devel/setup.bash

roslaunch autonomous_rov run_image_processing.launch

# Visual servoing

source devel/setup.bash

roslaunch autonomous_rov run_visual_servoing.launch

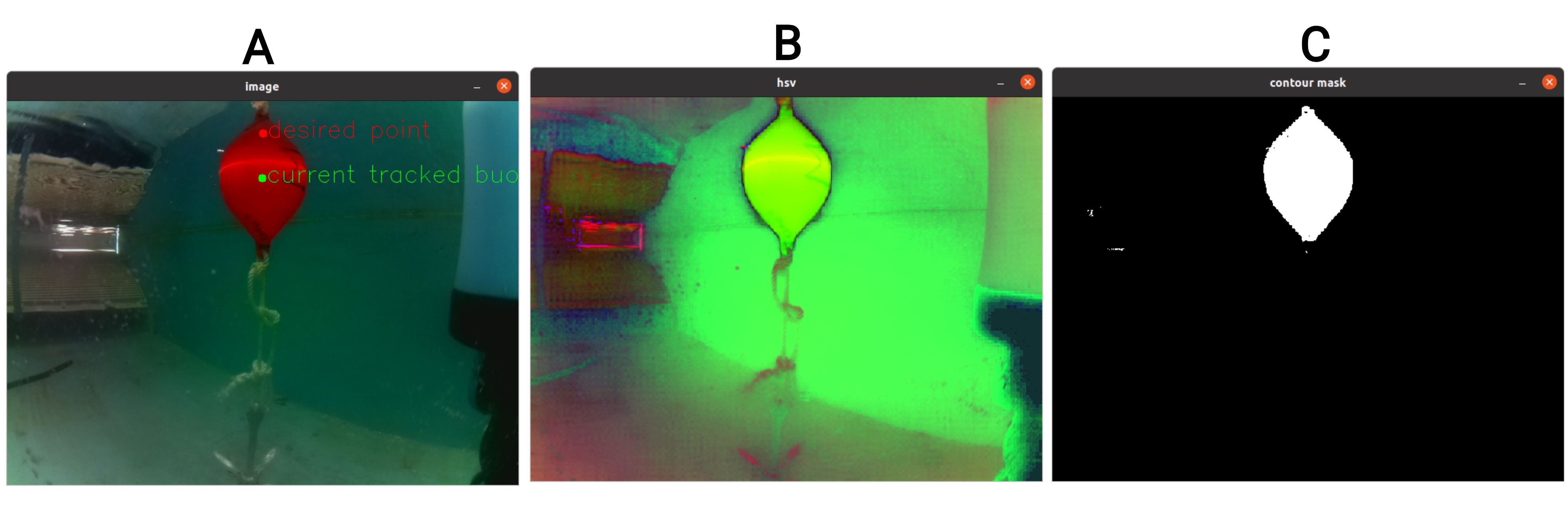

The input camera image is converted into the HSV (Hue, Saturation, Value) colour space using cv2.cvtColor(). The resulting image can be seen in Figure 1(B).

Figure 1: (A) RGB Image with track point and desired point overlay; (B) Image in HSV format; (C) Contour mask for the buoy

After converting to HSV, a colour mask is applied to isolate the buoy's colour (assumed to be red/orange).

The cv2.inRange() function creates a binary mask in which pixels within the specified HSV range (all orange pixels) are set to white (255) and all others are set to black (0). The HSV bounds for the red/orange range were chosen to suit our camera configuration. The contours of the mask are then extracted with cv2.findContours(). Contours are simply the boundaries of the white regions in the binary image.

The code identifies the largest contour in the binary mask, which is assumed to correspond to the buoy.

Finally, the centroid (centre of mass) of this contour is computed from its image moments, obtained with cv2.moments(), to determine the buoy's current position in the image.

The centroid coordinates (

The interaction matrix (L) relates the camera motion to the change of the observed feature point in the image plane. In simple words, it is the Jacobian that maps the camera's six-degree-of-freedom velocity to the velocity of the feature point in the image. For this project, we track a single feature point, so L has dimension $2 \times 6$.
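For a single point feature with normalised image coordinates $(x, y)$ and depth $Z$, the standard form of this matrix from the visual servoing literature can be written as below. This is a sketch under assumptions: the function names and the pinhole intrinsics `fx`, `fy`, `u0`, `v0` used to normalise pixel coordinates are illustrative and are not taken from the project code.

```python
import numpy as np


def pixel_to_normalised(u, v, fx, fy, u0, v0):
    """Convert pixel coordinates to normalised image coordinates (pinhole model)."""
    return (u - u0) / float(fx), (v - v0) / float(fy)


def interaction_matrix(x, y, Z):
    """2x6 interaction matrix of an image point.

    x, y : normalised image coordinates of the point
    Z    : depth of the point in the camera frame (metres), often only estimated
    Columns correspond to the camera velocity (vx, vy, vz, wx, wy, wz).
    """
    x, y, Z = float(x), float(y), float(Z)
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])
```

Because the true depth is usually unknown underwater, `Z` is typically a rough estimate, which is one reason several approximations of L are compared in practice.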

Here,

Here,

We subtract

All three methods for estimating the interaction matrix discussed in Section 2 are implemented here. However, we got the best results with the third option, i.e.,

Where:

- $u(t)$ is the control signal at time $t$,
- $e(t)$ is the error between the desired setpoint and the current state at time $t$, and
- $\lambda$ is the proportional gain, which determines the sensitivity of the controller's response to changes in the error.
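In image-based visual servoing, a proportional law of this kind is commonly written as $v = -\lambda L^{+} e$, where $L^{+}$ is the pseudo-inverse of the interaction matrix and $e$ is the difference between the current and desired feature position. The sketch below assumes that form and sign convention. The function name `p_control` and the `active` argument, which restricts control to a subset of camera velocity components, are hypothetical and not taken from the project code.

```python
import numpy as np


def p_control(L, s, s_star, lam, active=None):
    """Proportional visual servoing law v = -lam * pinv(L) * (s - s_star).

    L      : 2x6 interaction matrix
    s      : current feature position (normalised image coordinates)
    s_star : desired feature position
    lam    : proportional gain (lambda)
    active : optional list of column indices of L to control, in the order
             (vx, vy, vz, wx, wy, wz); all other components stay at zero
    Returns the 6-element camera velocity command.
    """
    L = np.asarray(L, dtype=float)
    e = np.asarray(s, dtype=float) - np.asarray(s_star, dtype=float)
    if active is None:
        active = list(range(6))

    # Use only the columns of the controlled degrees of freedom.
    L_sub = L[:, active]
    v_sub = -lam * np.linalg.pinv(L_sub).dot(e)

    v = np.zeros(6)
    v[active] = v_sub
    return v
```

For example, `p_control(L, s, s_star, lam, active=[0, 1])` would command only the camera x and y translations, leaving the other components at zero.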

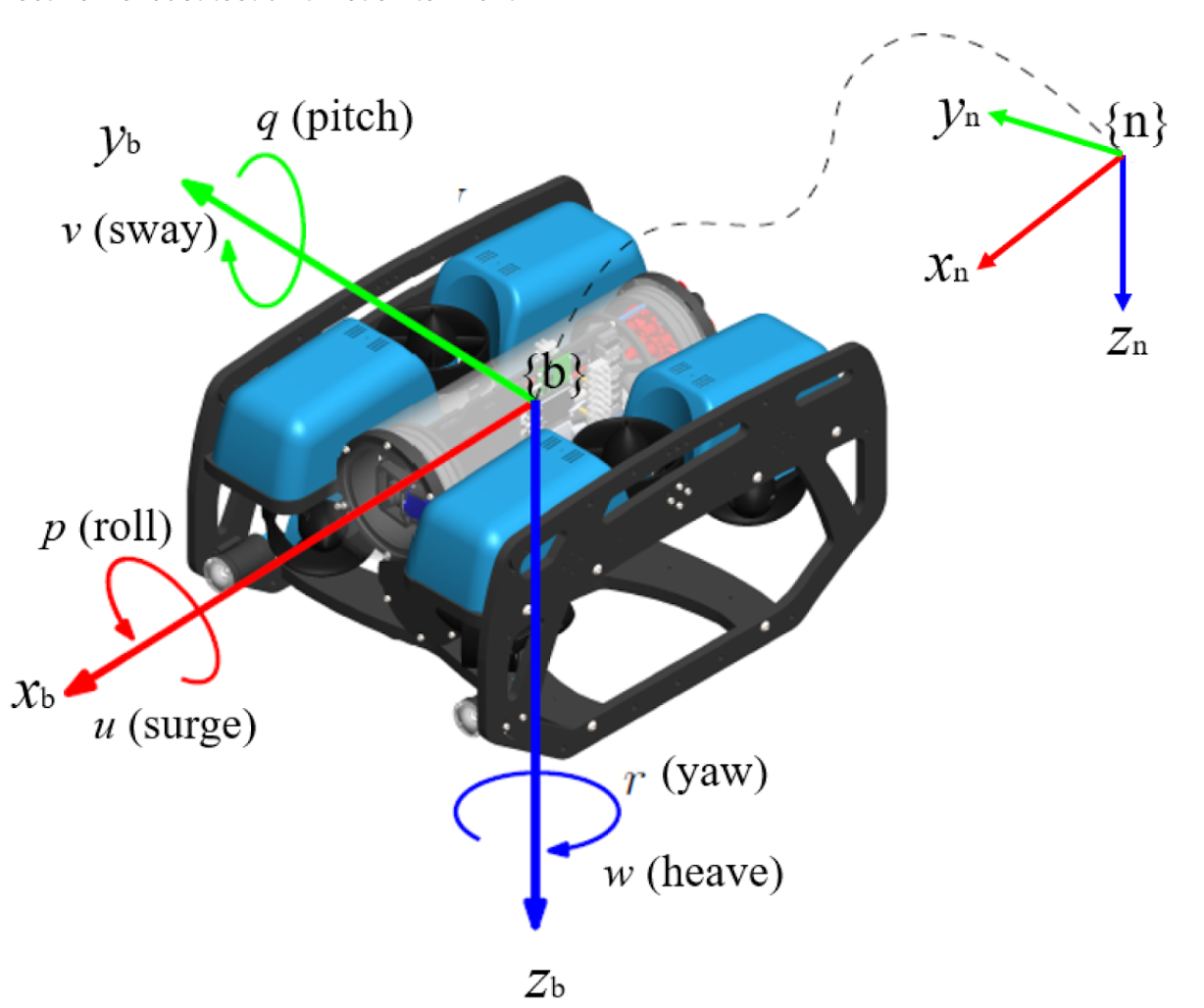

The computed velocities are expressed in the camera frame and must be converted to the robot's body frame to obtain a meaningful and accurate control action. To achieve this, we apply a transformation matrix.
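One common form of such a transformation is the $6 \times 6$ velocity twist matrix built from the rotation `R` and translation `t` of the camera frame relative to the body frame. The sketch below assumes that form. The function names and the example mounting (camera x right, y down, z forward; body x forward, y right, z down) are illustrative and are not the project's calibration.

```python
import numpy as np


def skew(t):
    """Skew-symmetric matrix such that skew(t).dot(w) equals the cross product t x w."""
    tx, ty, tz = t
    return np.array([
        [0.0, -tz, ty],
        [tz, 0.0, -tx],
        [-ty, tx, 0.0],
    ])


def twist_transform(R, t):
    """6x6 matrix mapping a camera-frame twist (v, w) to the body frame.

    R : 3x3 rotation of the camera frame expressed in the body frame
    t : position of the camera origin expressed in the body frame
    """
    R = np.asarray(R, dtype=float)
    V = np.zeros((6, 6))
    V[:3, :3] = R                 # linear velocity, rotated
    V[:3, 3:] = skew(t).dot(R)    # lever-arm effect of the angular velocity
    V[3:, 3:] = R                 # angular velocity, rotated
    return V


# Assumed mounting: body x = camera z, body y = camera x, body z = camera y.
R_EXAMPLE = np.array([
    [0.0, 0.0, 1.0],
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
])
```

With this assumed mounting and a zero offset, `twist_transform(R_EXAMPLE, [0, 0, 0]).dot(v_cam)` maps a camera x velocity to body sway and a camera y velocity to body heave.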

where

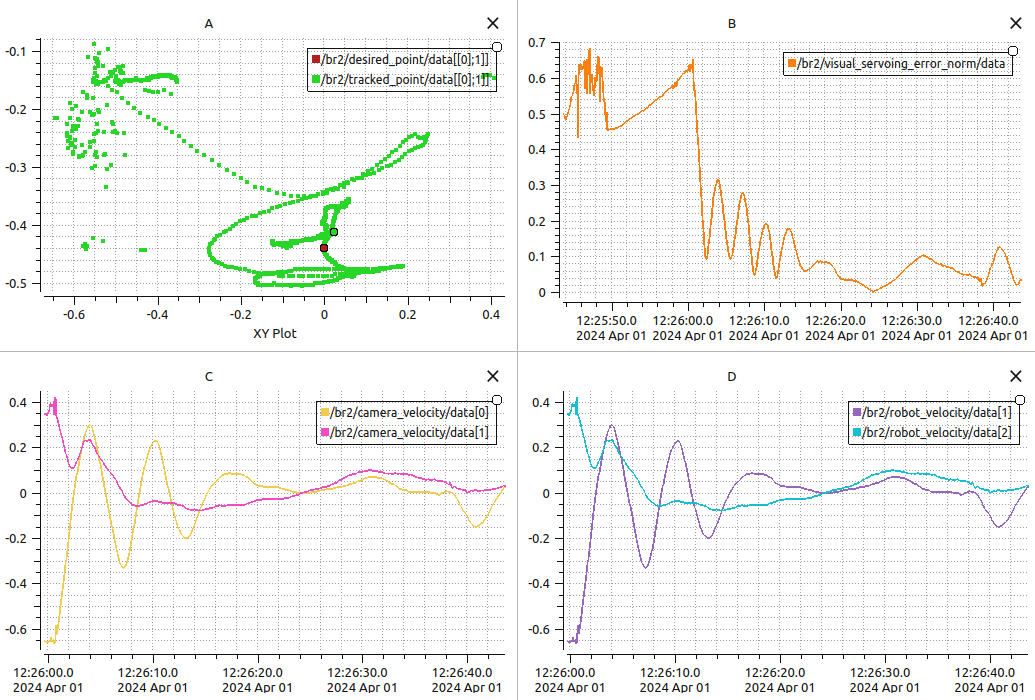

After testing the image processing on bag files, we tested the controller on the real tank. We tested for yaw and heave with a lambda(

Graphs (C) and (D) show the camera velocity and the robot velocity, respectively. These topics are published only after the controller is initialised on the ROV, so these plots start later than the others. In Graph (C),

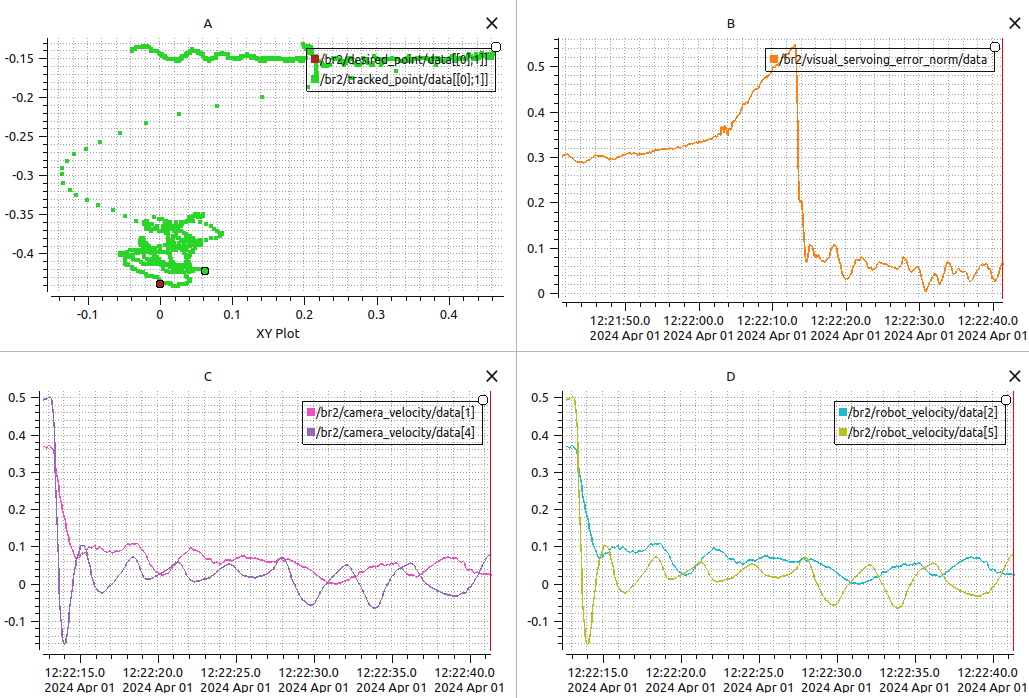

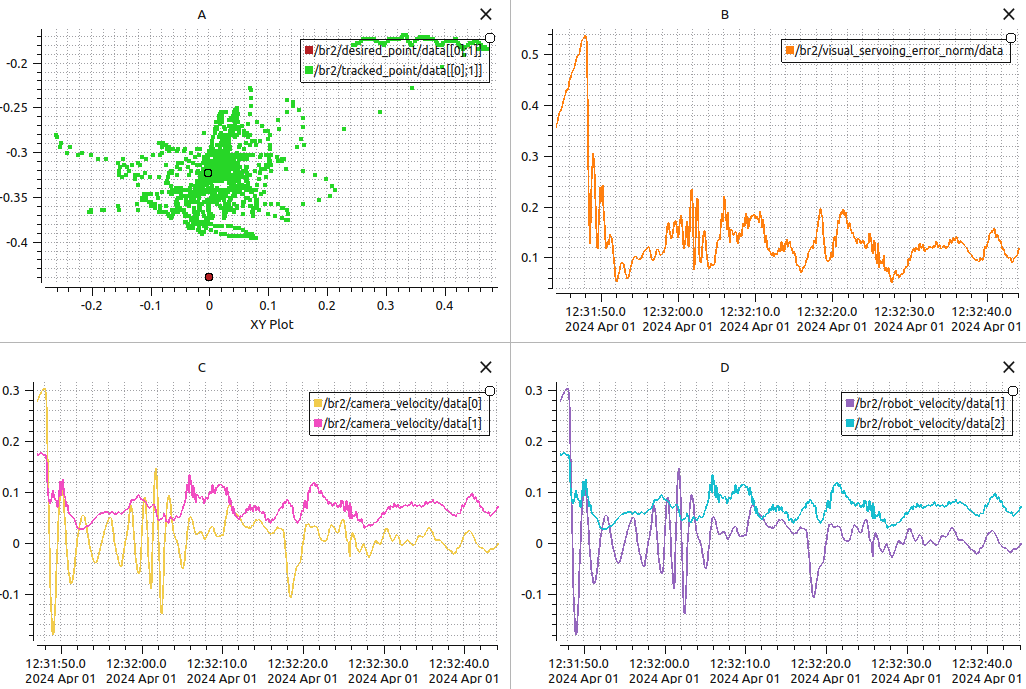

Figure 5 consists of 4 graphs for sway and heave with a lambda(

Graph (B) shows the L2 norm of the error. After initial oscillations, the error converges to almost zero. To check the robustness of the controller, we moved the buoy by hand, which produces the subsequent error "bumps" in the graph. Graphs (C) and (D) show the x and y velocities in the camera frame and the y and z velocities in the robot frame, which correspond to sway and heave.

Figure 6 shows the graphs for the five-degree-of-freedom controller with a lambda(