https://arxiv.org/abs/2001.01921 (ICRA 2020)

https://youtube.com/watch?v=K78NZbtKYVM (ICRA 2020 Video Presentation)

https://ieeexplore.ieee.org/document/9477208 (IEEE TCYB 2021 - WaSR2 extension)

https://github.com/lojzezust/WaSR (PyTorch reimplementation of the WaSR network)

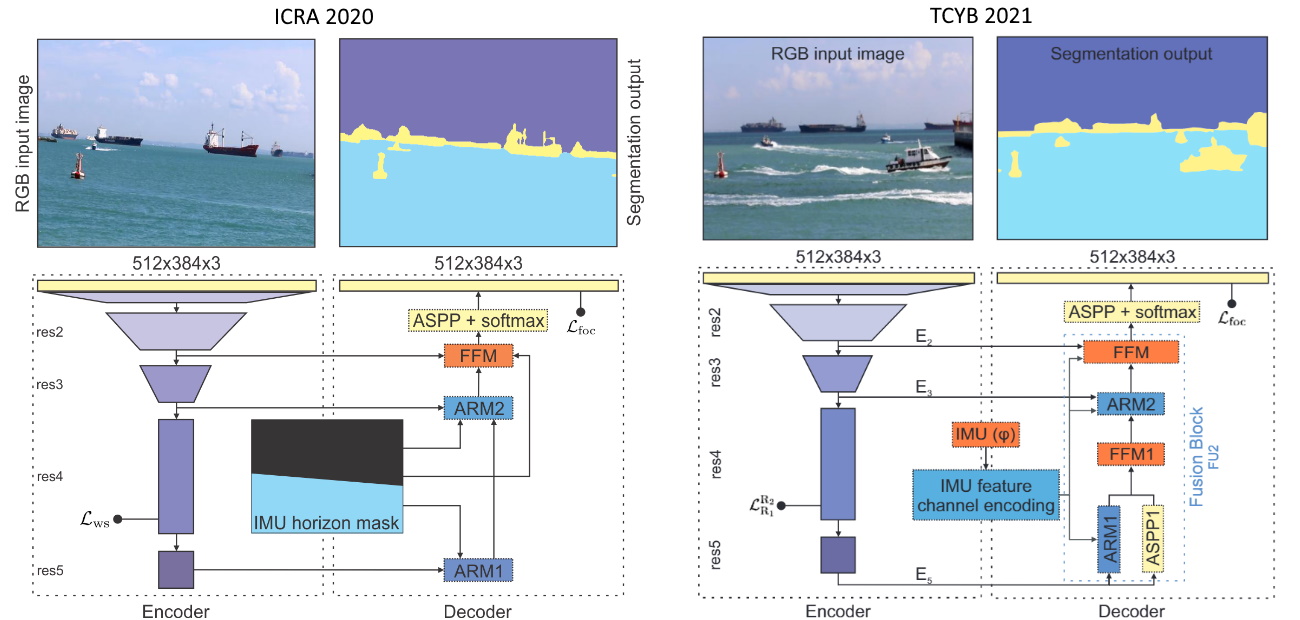

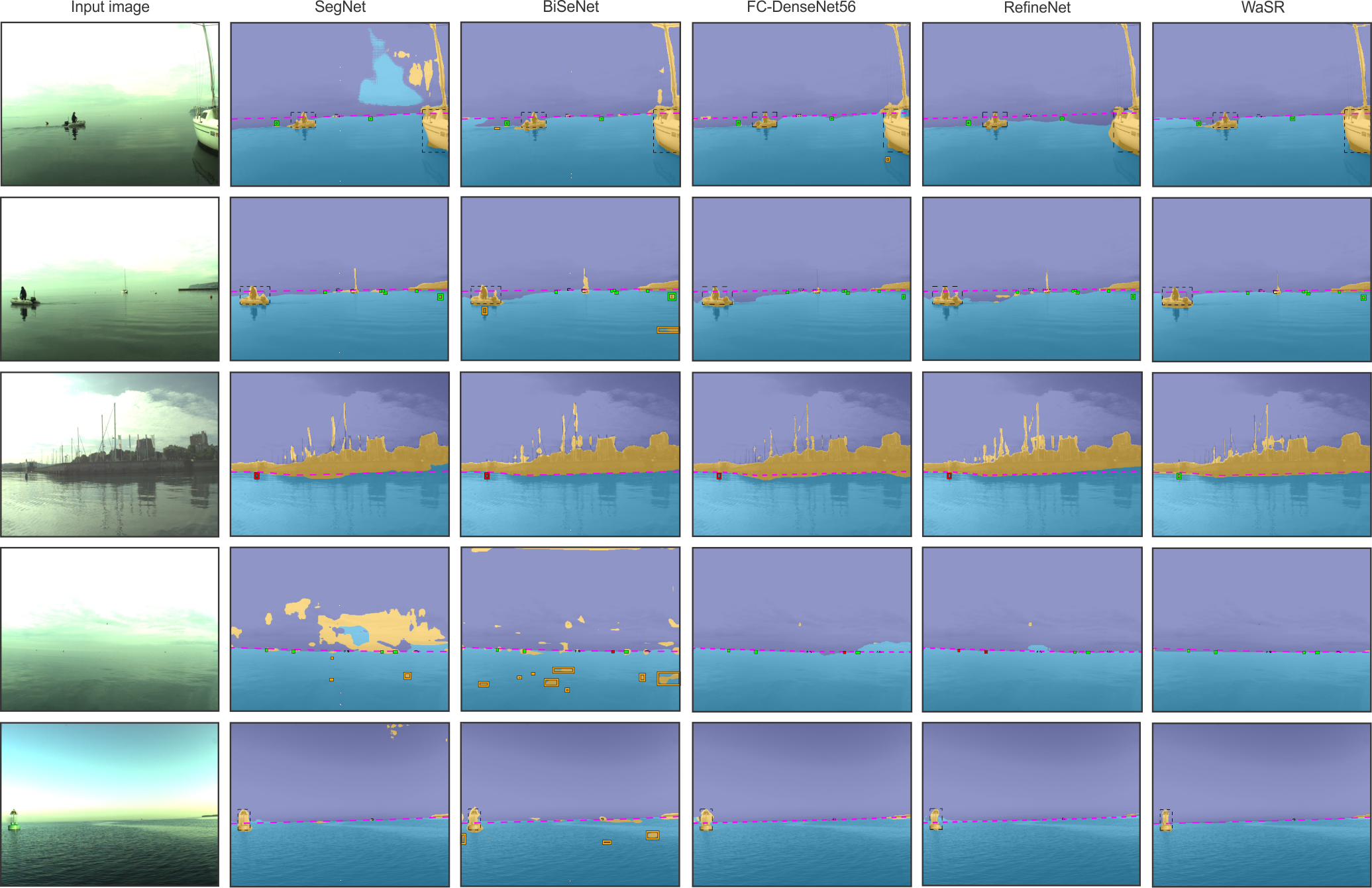

Obstacle detection using semantic segmentation has become an established approach in autonomous vehicles. However, existing segmentation methods, primarily developed for ground vehicles, are inadequate in an aquatic environment, as they produce many false positive (FP) detections in the presence of water reflections and wakes. To address these issues, we propose a novel deep encoder-decoder architecture, a water segmentation and refinement (WaSR) network, specifically designed for the marine environment. A deep encoder based on ResNet101 with atrous convolutions enables the extraction of rich visual features, while a novel decoder gradually fuses them with inertial information from the inertial measurement unit (IMU). The inertial information greatly improves the segmentation accuracy of the water component in the presence of visual ambiguities, such as fog on the horizon. Furthermore, a novel loss function for semantic separation is proposed to enforce the separation of different semantic components and increase the robustness of the segmentation. We investigate different loss variants and observe a significant reduction in false positives and an increase in true positives (TP). Experimental results show that WaSR outperforms the current state of the art by approximately 4% in F1 score on a challenging USV dataset. WaSR shows remarkable generalization capabilities and outperforms the state of the art by over 24% in F1 score in a strict domain generalization experiment.

Updates:

- [August 2021] PyTorch reimplementation made available

- [July 2021] Added ICRA 2020 presentation video

- [June 2021] Added IMU version and pretrained weights (as seen in the TCYB paper)

- [March 2020] Thomas Clunie ported WaSR to Python 3 and TensorFlow 1.15.2

- [February 2020] Initial commit

To-Do:

- Port the IMU variation fully to Python

- Upload requirements.txt file for quick installation

- Re-upload weights

- Update the README file

To successfully run WaSR you will need the following packages:

- Python >= 2.7.14

- OpenCV >= 3.4

- TensorFlow >= 1.2.0 (GPU) / >= 1.4.1 (CPU)

- Matplotlib

- NumPy

Execute the following commands to download and install the required packages and libraries (Ubuntu):

$ sudo apt-get update

$ sudo apt-get install python2.7

$ sudo apt-get install python-opencv

$ pip install -r requirements.txt

The WaSR architecture consists of a contracting path (encoder) and an expansive path (decoder). The encoder constructs rich deep features, while the decoder fuses inertial and visual information, increases the spatial resolution and produces the segmentation output.

Following a recent analysis [1] of deep networks on a maritime segmentation task, we base our encoder on the low-to-mid-level backbone of DeepLabv2 [2], i.e., a ResNet-101 [3] backbone with atrous convolutions. In particular, the model is composed of four residual convolutional blocks (denoted res2, res3, res4 and res5) combined with max-pooling layers. Hybrid atrous convolutions are added to the last two blocks to increase the receptive field and encode local context information into the deep features.
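To illustrate why atrous (dilated) convolutions enlarge the receptive field without adding parameters, the sketch below computes the receptive field of a stack of convolutions. A kernel of size k with dilation rate d spans d(k-1)+1 pixels along each axis. The dilation rates (1, 2, 5) are purely illustrative, a common hybrid-dilation pattern, and are not taken from the WaSR configuration.

```python
from typing import Iterable, Tuple


def effective_kernel(kernel: int, dilation: int) -> int:
    """Number of input pixels spanned along one axis by a dilated kernel."""
    return dilation * (kernel - 1) + 1


def receptive_field(layers: Iterable[Tuple[int, int, int]]) -> int:
    """Receptive field of a stack of (kernel, dilation, stride) convolution layers."""
    rf, jump = 1, 1  # jump: distance in input pixels between neighbouring outputs
    for kernel, dilation, stride in layers:
        rf += (effective_kernel(kernel, dilation) - 1) * jump
        jump *= stride
    return rf


# Three 3x3 convolutions, without and with (illustrative) hybrid dilation rates.
plain = [(3, 1, 1), (3, 1, 1), (3, 1, 1)]
hybrid = [(3, 1, 1), (3, 2, 1), (3, 5, 1)]
print(receptive_field(plain))   # 7
print(receptive_field(hybrid))  # 17
```

With the same number of weights, the dilated stack sees more than twice as much context; mixing rates that share no common factor is what "hybrid" dilation refers to, and it avoids the gridding holes that identical rates would leave.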

The primary task of the decoder is the fusion of visual and inertial information. We introduce the inertial information by constructing an IMU feature channel that encodes the location of the horizon at the pixel level. In particular, camera-IMU projection [4] is used to estimate the horizon line, and a binary mask with all pixels below the horizon set to one is constructed. This IMU mask serves as a prior probability of the water location and helps improve the estimated location of the water edge in the output segmentation.
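A minimal NumPy sketch of building such a mask, assuming the horizon has already been estimated (the camera-IMU projection of [4] is not reproduced here) and is given as two hypothetical image-space points (x0, y0) and (x1, y1) lying on the horizon line. Image rows grow downwards, so "below the horizon" means a larger y coordinate.

```python
import numpy as np


def horizon_mask(height, width, p0, p1):
    """Binary mask with 1 for pixels strictly below the line through p0 and p1.

    p0, p1: (x, y) image coordinates of two points on the estimated horizon
    (hypothetical inputs; assumes x0 != x1, i.e. the horizon is not vertical).
    """
    (x0, y0), (x1, y1) = p0, p1
    slope = (y1 - y0) / (x1 - x0)
    xs = np.arange(width)
    horizon_y = y0 + slope * (xs - x0)      # horizon row for every image column
    ys = np.arange(height)[:, None]         # column vector of row indices
    # Rows grow downwards: a larger y than the horizon row means "below".
    return (ys > horizon_y[None, :]).astype(np.uint8)


mask = horizon_mask(384, 512, (0, 150), (511, 170))
print(mask.shape, mask[:, 0].sum())  # (384, 512) 233
```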

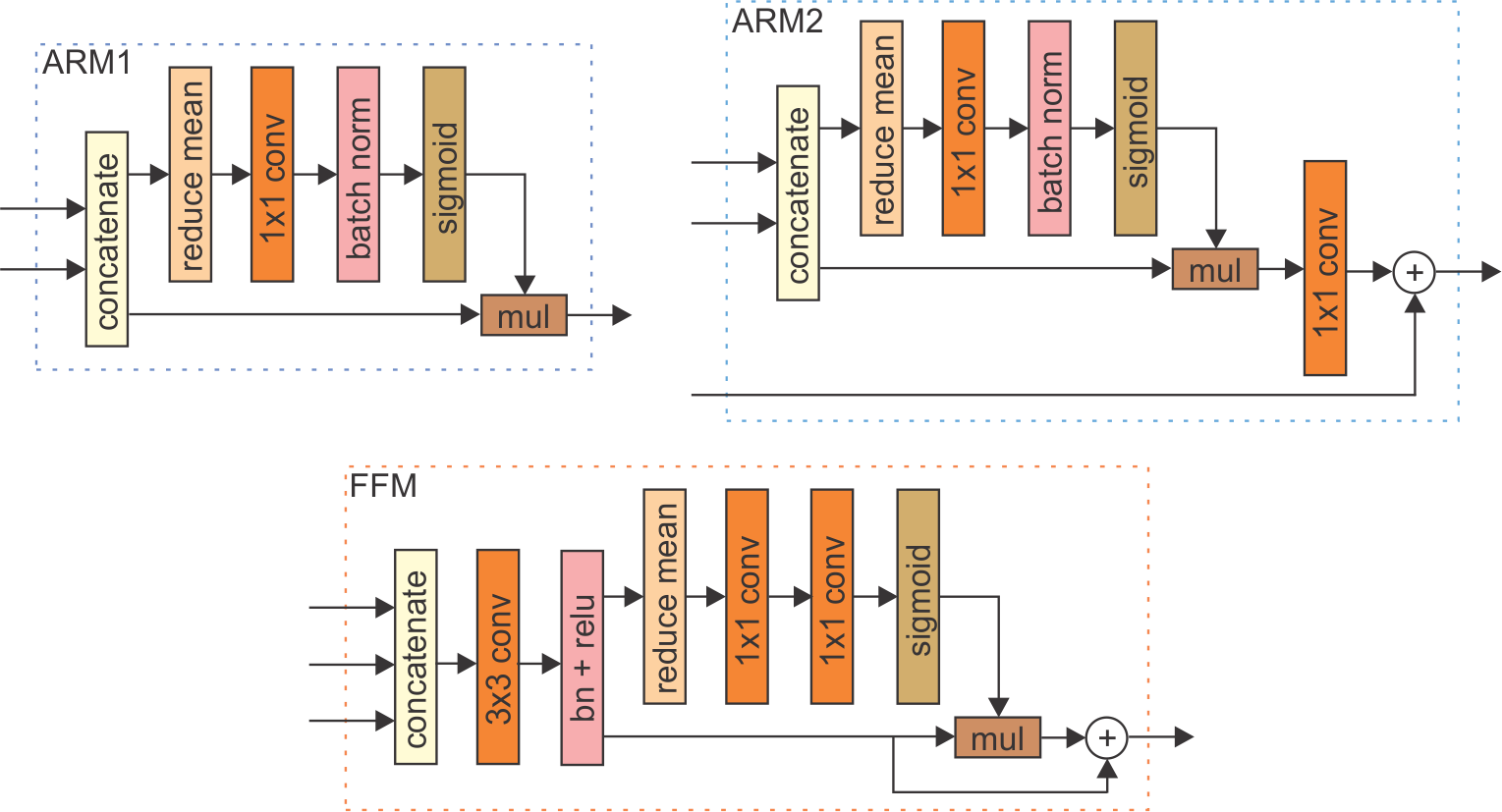

The IMU mask is treated as an externally generated feature channel, which is fused with the encoder features at multiple levels of the decoder. However, the values in the IMU channel and in the encoder features are on different scales. To avoid having to adjust the fusion weights manually, we use the Attention Refinement Module (ARM) and the Feature Fusion Module (FFM) proposed in [5] to learn an optimal fusion strategy.
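The NumPy sketch below shows the general idea of the two modules as described in BiSeNet [5]: ARM re-weights feature channels with an attention vector computed from globally pooled features, and FFM concatenates two inputs, mixes them with a convolution and re-weights the result. It is a simplified illustration, not the WaSR implementation: batch normalization is omitted, all convolutions are 1x1, the wiring (ARM on encoder features, FFM with the IMU mask) is assumed, and random weights stand in for learned parameters.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv1x1(x, weight, bias):
    """1x1 convolution: (C_in, H, W) input, (C_out, C_in) weight, (C_out,) bias."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]


def attention_refinement(x, weight, bias):
    """ARM-style block: global average pool -> 1x1 conv -> sigmoid -> channel re-weighting."""
    pooled = x.mean(axis=(1, 2), keepdims=True)          # (C, 1, 1)
    attention = sigmoid(conv1x1(pooled, weight, bias))    # (C, 1, 1), values in (0, 1)
    return x * attention


def feature_fusion(feat_a, feat_b, p):
    """FFM-style block fusing two inputs that share the same spatial size."""
    x = np.concatenate([feat_a, feat_b], axis=0)               # stack along channels
    x = np.maximum(conv1x1(x, p["w_in"], p["b_in"]), 0.0)      # mix channels + ReLU
    pooled = x.mean(axis=(1, 2), keepdims=True)
    att = np.maximum(conv1x1(pooled, p["w1"], p["b1"]), 0.0)   # squeeze + ReLU
    att = sigmoid(conv1x1(att, p["w2"], p["b2"]))              # excite + sigmoid
    return x + x * att                                         # residual re-weighting


rng = np.random.default_rng(0)
features = rng.standard_normal((8, 48, 64))  # encoder features (C=8, H=48, W=64)
imu = np.zeros((1, 48, 64))
imu[:, 21:, :] = 1.0                         # IMU mask resized to the feature resolution
refined = attention_refinement(features, rng.standard_normal((8, 8)) * 0.1, np.zeros(8))
params = {
    "w_in": rng.standard_normal((8, 9)) * 0.1, "b_in": np.zeros(8),
    "w1": rng.standard_normal((4, 8)) * 0.1, "b1": np.zeros(4),
    "w2": rng.standard_normal((8, 4)) * 0.1, "b2": np.zeros(8),
}
fused = feature_fusion(refined, imu, params)  # shape (8, 48, 64)
```

Because the attention weights are learned, the network itself decides how strongly the binary IMU channel should influence each fused feature channel, which is the motivation for not hand-tuning fusion weights.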

The final block of the decoder is an Atrous Spatial Pyramid Pooling (ASPP) module [2], which improves the segmentation of small structures (such as small buoys), followed by a softmax that produces the final segmentation mask.
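A NumPy sketch of an ASPP head in the DeepLabv2 style [2]: parallel 3x3 atrous convolutions with different rates are applied to the same features, their per-class outputs are summed, and a softmax turns the scores into class probabilities. The rates (6, 12, 18, 24) are those of DeepLabv2; the channel count, the three-class output and the random weights are illustrative assumptions.

```python
import numpy as np


def atrous_conv3x3(x, w, rate):
    """3x3 atrous convolution (cross-correlation) with zero 'same' padding.

    x: (C_in, H, W) features, w: (C_out, C_in, 3, 3) weights.
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (rate, rate), (rate, rate)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # Input shifted for kernel tap (i, j); taps are `rate` pixels apart.
            patch = xp[:, i * rate:i * rate + h, j * rate:j * rate + wd]
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], patch)
    return out


def aspp_softmax(x, weights, rates=(6, 12, 18, 24)):
    """Sum class scores of parallel atrous branches, then softmax over classes."""
    logits = sum(atrous_conv3x3(x, w, r) for w, r in zip(weights, rates))
    logits = logits - logits.max(axis=0, keepdims=True)  # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=0, keepdims=True)


rng = np.random.default_rng(0)
features = rng.standard_normal((16, 48, 64))
weights = [rng.standard_normal((3, 16, 3, 3)) * 0.1 for _ in range(4)]
probs = aspp_softmax(features, weights)  # (3, 48, 64) class probabilities
segmentation = probs.argmax(axis=0)      # (48, 64) map of class indices
```

Each branch looks at the same pixel with a differently sized context, so small objects are still described by the small-rate branch while large rates contribute scene context.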

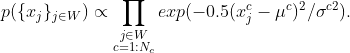

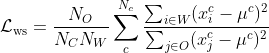

Since we would like to enforce clustering of water features, we can approximate their distribution by a Gaussian with per-channel means and variances, where we assume channel independence for computational tractability. The similarity of all other pixels, which correspond to obstacles, to the water features can then be measured as their joint probability under this Gaussian.
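Written out in our own notation (a reconstruction from the description above, not copied from the paper): let $x_{j,c}$ be channel $c$ of the feature vector at pixel $j$, $C$ the number of channels, and $\Omega_w$ and $\Omega_o$ the sets of water and obstacle pixels. The per-channel water statistics, the joint probability of the obstacle features under the independent-channel water Gaussian, and its negative logarithm are

$$
\mu_c = \frac{1}{|\Omega_w|} \sum_{j \in \Omega_w} x_{j,c}, \qquad
\sigma_c^2 = \frac{1}{|\Omega_w|} \sum_{j \in \Omega_w} \left(x_{j,c} - \mu_c\right)^2,
$$

$$
S = \prod_{j \in \Omega_o} \prod_{c=1}^{C} \frac{1}{\sqrt{2\pi\sigma_c^2}} \exp\!\left(-\frac{(x_{j,c} - \mu_c)^2}{2\sigma_c^2}\right),
$$

$$
-\log S = \frac{1}{2} \sum_{j \in \Omega_o} \sum_{c=1}^{C} \left[\frac{(x_{j,c} - \mu_c)^2}{\sigma_c^2} + \log\left(2\pi\sigma_c^2\right)\right].
$$

Making $S$ small therefore requires large variance-normalized distances between the obstacle features and the water mean.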

We would like to enforce learning of features that minimize this probability. By expanding the expression for the per-channel water standard deviations, taking the logarithm of this probability, flipping the sign and inverting, we arrive at an equivalent obstacle-water separation loss.
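The NumPy sketch below follows this verbal derivation: it estimates per-channel water statistics, computes the variance-normalized squared distance of obstacle features from the water mean (the part of the negative log-probability that depends on the obstacle features, without the factor 1/2), and inverts it, so that minimizing the loss pushes obstacle features away from the water cluster. It is an illustration under these assumptions and not necessarily identical to the loss in the WaSR code; in particular, averaging over obstacle pixels instead of summing, and the `eps` constant, are our own choices.

```python
import numpy as np


def separation_loss(features, water_mask, obstacle_mask, eps=1e-6):
    """Inverse of the variance-normalized distance of obstacle features to water.

    features: (C, H, W) decoder features; water_mask, obstacle_mask: (H, W) booleans.
    """
    water = features[:, water_mask]          # (C, N_water)
    obstacles = features[:, obstacle_mask]   # (C, N_obstacle)
    mu = water.mean(axis=1, keepdims=True)   # per-channel water mean
    var = water.var(axis=1, keepdims=True)   # per-channel water variance
    # Mean over obstacle pixels of the sum over channels of (x - mu)^2 / sigma^2.
    dist = ((obstacles - mu) ** 2 / (var + eps)).sum(axis=0).mean()
    return 1.0 / (dist + eps)


rng = np.random.default_rng(0)
water_mask = np.zeros((32, 32), dtype=bool)
water_mask[16:, :] = True          # toy example: bottom half is water
obstacle_mask = ~water_mask        # top half is treated as obstacles
feats = rng.normal(0.0, 0.1, size=(4, 32, 32))
separated = feats.copy()
separated[:, obstacle_mask] += 3.0  # move obstacle features away from the water cluster

loss_mixed = separation_loss(feats, water_mask, obstacle_mask)
loss_separated = separation_loss(separated, water_mask, obstacle_mask)
```

In the toy example, obstacle features drawn from the same distribution as water give a loss near 1/C, while well-separated obstacle features give a loss close to zero.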

To train the network from scratch (or from pretrained weights), use the script wasr_train_noimu.py for the NO-IMU variant or wasr_train_imu.py for the IMU variant. Both scripts expect the same input arguments. When fine-tuning the network, make sure to freeze the pretrained parameters for the first n iterations and train only the last layer.
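The freezing schedule can be expressed framework-independently as a choice of which parameters receive gradient updates at a given step. In this sketch the parameter names, `last_layer_prefix` and `freeze_steps` (the n above) are hypothetical; with a TensorFlow 1.x optimizer the selected variables would typically be passed through the `var_list` argument of `minimize`.

```python
from typing import List, Sequence


def trainable_params(param_names: Sequence[str], step: int,
                     freeze_steps: int, last_layer_prefix: str) -> List[str]:
    """Names of the parameters to update at `step` when fine-tuning.

    For the first `freeze_steps` iterations only the last layer is trained;
    afterwards every parameter is updated.
    """
    if step < freeze_steps:
        return [name for name in param_names if name.startswith(last_layer_prefix)]
    return list(param_names)


# Hypothetical variable names, for illustration only.
names = ["encoder/res2/conv1", "decoder/arm1/conv", "decoder/aspp/out"]
print(trainable_params(names, step=100, freeze_steps=1000, last_layer_prefix="decoder/aspp"))
# ['decoder/aspp/out']
print(trainable_params(names, step=1000, freeze_steps=1000, last_layer_prefix="decoder/aspp"))
# ['encoder/res2/conv1', 'decoder/arm1/conv', 'decoder/aspp/out']
```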

- batch-size: number of images sent to the network in one step

- data-dir: path to the directory containing the MODD2 dataset

- data-list: path to the file listing the images in the dataset

- grad-update-every: number of steps after which the gradient update is applied

- ignore-label: value of the label to ignore during training

- input-size: comma-separated string with the height and width of the images (default: 384,512)

- is-training: whether to update the running means and variances during training

- learning-rate: base learning rate for training with polynomial decay

- momentum: momentum component of the optimiser

- not-restore-last: whether not to restore the last layers (when using weights from a pretrained encoder network)

- num-classes: number of classes to predict

- num-steps: number of training steps (these are not epochs!)

- power: decay parameter used to compute the learning rate

- restore-from: where to restore the model parameters from

- snapshot-dir: where to save snapshots of the model

- weight-decay: regularisation parameter for the L2 loss
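Two groups of these arguments shape the optimisation loop: learning-rate, power and num-steps define the polynomial learning-rate decay, and grad-update-every accumulates gradients over several steps before applying them. The sketch below assumes the standard DeepLab-style "poly" schedule, lr = base_lr * (1 - step / num_steps) ** power, and plain averaging of the accumulated gradients; the exact formulas used in the training scripts may differ.

```python
from typing import List, Optional


def poly_learning_rate(base_lr: float, step: int, num_steps: int, power: float) -> float:
    """Polynomial ("poly") learning-rate decay that reaches 0 at num_steps."""
    return base_lr * (1.0 - step / num_steps) ** power


class GradientAccumulator:
    """Average gradients over `update_every` steps before releasing them."""

    def __init__(self, update_every: int):
        self.update_every = update_every
        self.total: Optional[List[float]] = None
        self.count = 0

    def add(self, grads: List[float]) -> Optional[List[float]]:
        """Add one step's gradients; return the averaged gradients when ready, else None."""
        if self.total is None:
            self.total = [0.0] * len(grads)
        self.total = [t + g for t, g in zip(self.total, grads)]
        self.count += 1
        if self.count < self.update_every:
            return None
        averaged = [t / self.count for t in self.total]
        self.total, self.count = None, 0  # reset for the next accumulation window
        return averaged


acc = GradientAccumulator(update_every=2)
print(acc.add([1.0, 2.0]))  # None (not enough steps yet)
print(acc.add([3.0, 4.0]))  # [2.0, 3.0]
print(poly_learning_rate(1e-3, step=0, num_steps=100, power=0.9))  # 0.001
```

Accumulating gradients lets a small batch-size emulate a larger effective batch when GPU memory is limited.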

- WaSR NO-IMU variant: weights are available for download here

- WaSR IMU variant: weights are available for download here

To run inference on a single image, use the script wasr_inference_noimu_general.py for the WaSR NO-IMU variant or wasr_inference_imu_general.py for the WaSR IMU variant. Both scripts expect the same input arguments and can be run on images from an arbitrary maritime dataset.

- dataset-path: path to the MODD2 dataset files on which inference is performed

- model-weights: path to the file with the model weights

- num-classes: number of classes to predict

- save-dir: where to save the predicted mask

- img-path: path to the image on which to run inference

Example usage:

python wasr_inference_noimu_general.py --img-path example_1.jpg

The above command takes the image example_1.jpg from the folder test_images/ and segments it. By default, the segmentation result is saved in the output/ folder.
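As a sketch of how a per-class network output could be turned into a viewable mask, the snippet below takes class probabilities of shape (num_classes, H, W), picks the most likely class per pixel and maps it to a colour. The class order (0 = obstacles and environment, 1 = water, 2 = sky) and the colours are assumptions made for illustration; check them against the model and dataset you use.

```python
import numpy as np

# Assumed class order and illustrative RGB colours (not taken from the WaSR code).
PALETTE = np.array([
    [247, 195, 37],   # 0: obstacles and environment
    [41, 167, 224],   # 1: water
    [90, 75, 164],    # 2: sky
], dtype=np.uint8)


def colorize(probs):
    """Map (num_classes, H, W) class probabilities to an (H, W, 3) RGB image."""
    labels = probs.argmax(axis=0)  # most likely class index per pixel
    return PALETTE[labels]         # fancy indexing looks up one colour per pixel


probs = np.zeros((3, 2, 2))
probs[1] = 1.0                     # every pixel classified as water
rgb = colorize(probs)              # (2, 2, 3) uint8 image
```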

| Example input image | Example segmentation output |
| --- | --- |

To run inference on the MODD2 dataset, use the provided bash script wasr_inferences_noimu.sh for the WaSR NO-IMU variant or wasr_inferences_imu.sh for the WaSR IMU variant. The bash scripts run the corresponding Python scripts (wasr_inference_noimu.py and wasr_inference_imu.py).

- dataset-path: path to the MODD2 dataset files on which inference is performed

- model-weights: path to the file with the model weights

- num-classes: number of classes to predict

- save-dir: where to save the predicted masks

- seq: sequence number to evaluate

- seq-txt: path to the file listing the images in the sequence

Please cite our WaSR paper(s) if you use the provided code:

@inproceedings{bovcon2020water,

title={A water-obstacle separation and refinement network for unmanned surface vehicles},

author={Bovcon, Borja and Kristan, Matej},

booktitle={2020 IEEE International Conference on Robotics and Automation (ICRA)},

pages={9470--9476},

year={2020},

organization={IEEE}

}

@article{bovconwasr,

title={WaSR--A Water Segmentation and Refinement Maritime Obstacle Detection Network},

author={Bovcon, Borja and Kristan, Matej},

journal={IEEE Transactions on Cybernetics}

}

[1] Bovcon et al., The MaSTr1325 Dataset for Training Deep USV Obstacle Detection Models, IROS 2019

[2] Chen et al., DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs, TPAMI 2018

[3] He et al., Deep Residual Learning for Image Recognition, CVPR 2016

[4] Bovcon et al., Stereo Obstacle Detection for Unmanned Surface Vehicles by IMU-assisted Semantic Segmentation, RAS 2018

[5] Yu et al., BiSeNet: Bilateral Segmentation Network for Real-time Semantic Segmentation, ECCV 2018