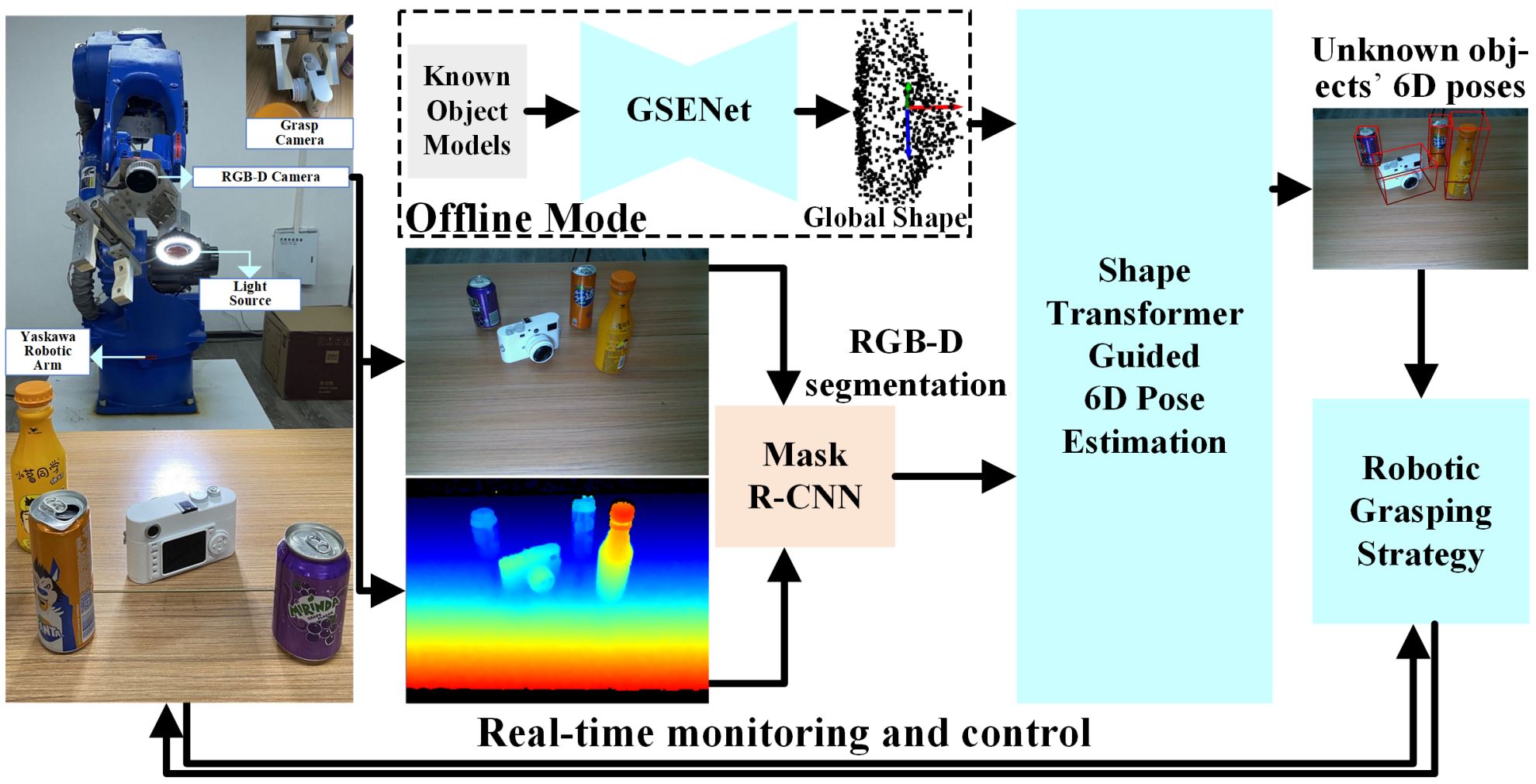

Robotic Continuous Grasping System by Shape Transformer-Guided Multi-Object Category-Level 6D Pose Estimation

This is the PyTorch implementation of the paper "Robotic Continuous Grasping System by Shape Transformer-Guided Multi-Object Category-Level 6D Pose Estimation", published in IEEE Transactions on Industrial Informatics by J. Liu, W. Sun, C. Liu, X. Zhang, and Q. Fu.

Video: https://www.bilibili.com/video/BV16M4y1Q7CD or https://youtu.be/ZeGN6_DChuA

Our code has been tested with

- Ubuntu 20.04

- Python 3.8

- CUDA 11.0

- PyTorch 1.8.0
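To compare a local setup against the versions listed above, a small script along these lines can print what is actually installed. This is only a sketch and not part of the repository; the function name `environment_report` is hypothetical, and the PyTorch import is treated as optional so the script also runs before PyTorch is installed.

```python
import platform
import sys


def environment_report():
    """Collect the versions relevant to the tested configuration."""
    report = {
        "os": platform.platform(),
        "python": "{}.{}".format(*sys.version_info[:2]),
        "torch": None,  # filled in only if PyTorch can be imported
        "cuda": None,   # CUDA version PyTorch was built with, if any
    }
    try:
        import torch
    except ImportError:
        # PyTorch is not installed yet; report what we have.
        return report
    report["torch"] = torch.__version__
    report["cuda"] = torch.version.cuda  # None for CPU-only builds
    return report


if __name__ == "__main__":
    for name, value in environment_report().items():
        print(f"{name}: {value}")
```

Other version combinations may also work; the list above only states what the code was tested with.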

We recommend using conda to set up the environment.

If you have already installed conda, please use the following commands.

conda create -n CLGrasp python=3.8

conda activate CLGrasp

conda install ...

Build PointNet++

cd 6D-CLGrasp/pointnet2/pointnet2

python setup.py install

Build nn_distance

cd 6D-CLGrasp/lib/nn_distance

python setup.py install

Download camera_train, camera_val, real_train, real_test, ground-truth annotations, and mesh models provided by NOCS.

Unzip and organize these files in 6D-CLGrasp/data as follows:

data
├── CAMERA
│   ├── train
│   └── val
├── Real
│   ├── train
│   └── test
├── gts
│   ├── val
│   └── real_test
└── obj_models
    ├── train
    ├── val
    ├── real_train
    └── real_test
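Before running the preprocessing scripts, it can help to confirm that the unzipped files match the layout above. The following is a minimal sketch, not part of the repository: the helper name `missing_dirs` is hypothetical, and it reads the last four entries of the tree (train, val, real_train, real_test) as sub-directories of obj_models.

```python
from pathlib import Path

# Sub-directories from the layout above, relative to the data root.
# Assumption: the final four entries belong under obj_models.
EXPECTED_DIRS = [
    "CAMERA/train", "CAMERA/val",
    "Real/train", "Real/test",
    "gts/val", "gts/real_test",
    "obj_models/train", "obj_models/val",
    "obj_models/real_train", "obj_models/real_test",
]


def missing_dirs(data_root):
    """Return the expected sub-directories that do not exist under data_root."""
    root = Path(data_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
```

Calling `missing_dirs("6D-CLGrasp/data")` returns an empty list when every directory is in place; any names it returns point to archives that still need to be unzipped or moved. The check only looks at directory names, not at the files inside them.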

Run python scripts to prepare the datasets.

cd 6D-CLGrasp/preprocess

python shape_data.py

python pose_data.py

You can download our pretrained models (camera, real) and put them in the '../train_results/CAMERA' and '../train_results/REAL' directories, respectively. You can then run a quick evaluation on the CAMERA25 and REAL275 datasets using the following command. (The segmentation results '../results/maskrcnn_results' can be downloaded from SPD.)

bash eval.sh

To train the model, first download the complete dataset, then organize and preprocess it as described above.

# optional - train GSENet to obtain the global shapes (the pretrained global shapes can be found in '6D-CLGrasp/assets1')

python train_ae.py

python mean_shape.py

train.py is the main file for training. You can simply start training using the following command.

bash train.sh

If you find the code useful, please cite our paper.

@article{TII2023,

author={Liu, Jian and Sun, Wei and Liu, Chongpei and Zhang, Xing and Fu, Qiang},

journal={IEEE Transactions on Industrial Informatics},

title={Robotic Continuous Grasping System by Shape Transformer-Guided Multi-Object Category-Level 6D Pose Estimation},

year={2023},

publisher={IEEE},

doi={10.1109/TII.2023.3244348}

}

Our code is developed based on the following repositories. We thank the authors for releasing their code.

This project is licensed under the terms of the MIT license.