For this project, I took the CAD files from the PLEN robot and turned them into OpenAI Gym environments in Pybullet and Gazebo via URDF. Then, I applied Twin-Delayed Deep Deterministic Policy Gradient (TD3) Reinforcement Learning to learn a gait. Finally, I built the real robot and tested my learned policy. I used OpenAI Gym so you can just plug and play your code!

As the sim-to-real experiment was unsuccessful, I implemented a simple sinewave-based trajectory for the robot's feet and solved the Inverse Kinematics using this paper. Based on this implementation, I learned that the robot needs wider hips, a lower CoM, and higher-torque motors for any RL policy to be deployed. Here's a video of the manual trajectory:

The core project components were:

Pytorchto construct the six deep neural networks required by TD3 Reinforcement Learning.PyBulletfor simulating the robot and environment.RViz and Gazebofor an alternate ROS-based simulation, as well as for constructing and testing the URDF mechanically and dynamically.The Real Robotwas 3D printed and can be controlled using either joint position replay, or by sampling actions from a policy.

To read the theory behind each element of this project, please visit my portfolio website

forkthis repository, then download and build usingwstoolandcatkin_makeif you want to use the Gazebo Environment.- To run the Gazebo training script,

sourceyour workspace, and writeroslaunch plen_ros plen_walk.launch td3:=True - To run the Pybullet training script, simple navigate to

plen_td3.pyunder thesrcdirectory in theplen_bulletpackage, and run. - To evaluate a policy in Pybullet, navigate to the above directory, and run

walk_eval.py. You will need to open the file and edit the policynumberto choose a different one. - To evaluate the manual trajectory in Pybullet, navigate to the above directory and run

trajectory_eval.py. Feel free to go one directory deeper intotrajectory_generator.pyand edit the gait parameters to your liking. - To run a policy or to replay a trajectory on the real robot, you will need to send the

plen_realpackage to the robot's Raspberry Pi. Then, navigate into that package'ssrcdirectory, and runplen_real.pyand follow the instructions on screen. To deploy a policy, you will need to runmain_plen_walk_pc.pyon your computer to send the robot's pose as measured by an overhead camera. Note that you will need to calibrate the camera and IMU following the instructions linked. - Finally, if you would like to use your own RL script on this robot, you can do so by importing

plen_env.pyeither from theplen_bulletorplen_rospackages and use them as you would any other OpenAI Gym Environment.

- ROS Melodic

- Gazebo

- Pytorch

- Pybullet

- Gym

- OpenCV

- Adadfruit Servo Toolkit

- Adafruit Sensor Toolkit

This package holds the Pybullet implementation of the project. The src directory contains the training script, plen_td3.py, the policy evaluation script walk_eval.py, and the manual trajectory evaluation script trajectory_eval.py. Move one directory below, and you will see plen_env.py, which contains the OpenAI Gym and Bullet interface, as well as trajectory_generator.py, a script used to generate sinewave trajectories for the robot's feet, along with corresponding joint angles found using an analytical Inverse Kinematics solver.

This package holds the live implementation of this project. This package should be sent to the robot's Raspberry Pi Zero W, and the plen_real.py script should be run there. The main_walk_pc.py script should be run on the host computer, as it records the robot position using OpenCV in the object_detector.py script, and sends it to the Pi using sockets. The robot's motors are controlled using the servo_model.py script which contains the ServoJoint object with actuation and measurement capabilities. The IMU is controlled using the imu.py script containing the IMU object with measurement and calibration capabilities. Finally, the UDP Client and Server objects are located in the socket_comms.py script.

This was the first package in the project, and for this reason contains the TD3 RL implementation which the other packages borrow from. It also contains the Gazebo equivalent of plen_bullet, but it is not recommended for use unless you have a Quadrupedal robot to simulate, as Gazebo's inability to deterministically step a simulation makes it difficult to train bipedal robots due to the variable amount of time required for training the Agent, which makes realtime training tricky.

This package also contains the urdf files for the robot in xacro format. This needs to be converted to urdf for use in Pybullet, which is why the plen_bullet package contains its own urdf, identical to the one here.

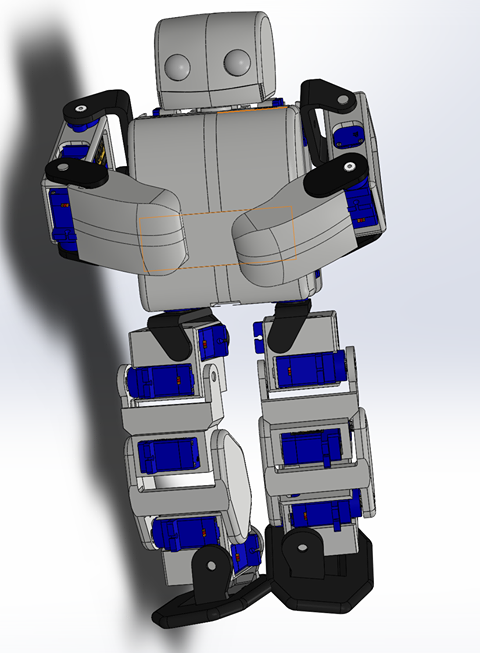

The files presented in the cad directory were modified to fit 9g servo motors, as well as a Raspberry Pi and PWM board instead of the stock components recommended by the PLEN Project Company.

- Raspberry Pi Zero W

- PWM Board

- FS90-FB Servo Motors or TowerPro 9g

- IMU

- 60FPS Camera for pose tracking (sim runs at 60Hz)

- 4.8V Battery

- ADC

- Force Sensing Resistor for Foot Contact

For assembly instructions, please follow this guide by the PLEN Project Company. Feel free to use the servo_calibration_basic.py script to help you align the servo horns. Note that a software offset of 9 degrees was applied to each servo motor (see plen_real.py in plen_real) to zero them properly.

One method of closing the sim-to-real gap is to increase the fidelity of the simulation. This has been done for quadrupedal robots in Pybullet, so it should be possible for PLEN as well. In addition, I noticed that Gazebo more accurately represents contacts, so an alternate or parallel route for improvement would be to implement a plugin to deterministically step the simulation while still applying joint commands.

Finally, while this was not my original goal, I inadvertedly dove into Zero Moment Point trajectory generation for bipedal robots during the final week of this project. Although the topic requires significantly more research for me to implement (hence why I opted for open-loop sinewave-based foot trajectories), it is definitely worthwhile. It would be interested to apply a ZMP-based gait, and use RL to improve upon it.