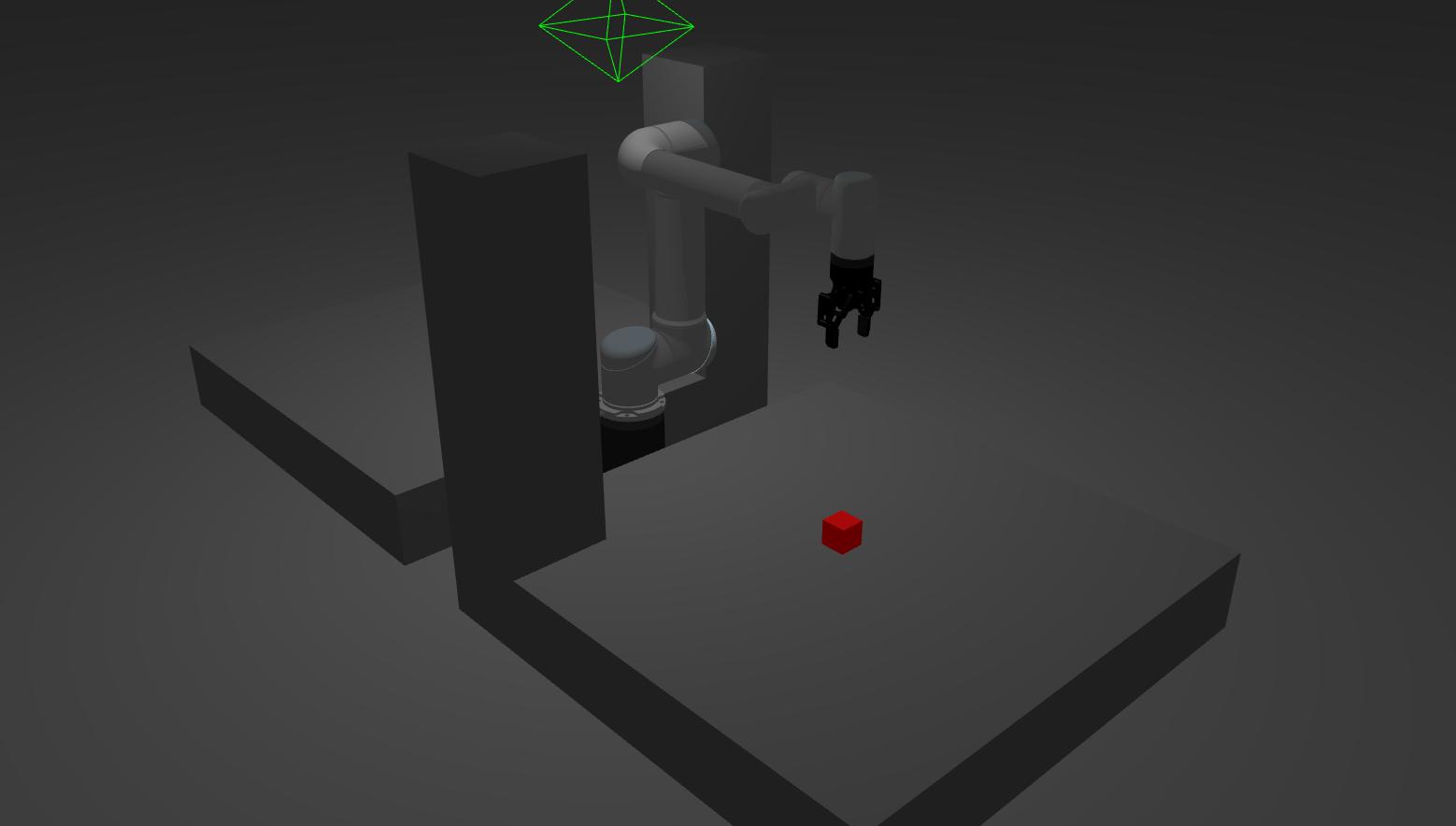

This repository initialises a UR5 robot with a Robotiq 85 Gripper attachment. It performs a pick-and-place task in a workspace with obstacles. Environment is registered and recognised using octomap.

- Ubuntu 20.04

- ROS Noetic Ninjemys

- MoveIt! Motion Planning Framework

- Gazebo 11

Install prerequisite packages

sudo apt install ros-noetic-ros-numpy ros-noetic-ros-controllers ros-noetic-ros-control ros-noetic-cv-bridge ros-noetic-image-proc ros-noetic-image-pipelineInitialise the catkin workspace:

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/srcClone prerequisite repositories:

# Clone this repository

git clone https://github.com/juniorsundar/ur5_gripper_control.git

# Cloning robot and gripper description files

git clone -b melodic-devel https://github.com/ros-industrial/universal_robot.git

git clone https://github.com/filesmuggler/robotiq.git

# Cloning Realsense Gazebo Plugin

git clone https://github.com/issaiass/realsense_gazebo_plugin.gitBuild catkin workspace:

cd ~/catkin_ws

catkin_make

source devel/setup.bashTo launch the robot and environment, and run the pick-and-place operation:

roslaunch ur5_gripper_control simulation.launchThe nuances of how this works are explained below.

- UR5

- Robotiq 85 Gripper

- Raised Pedestal (20cm) as Base

refer to: urdf/ur5_robotiq85_gripper.urdf.xacro

- Start and End Platform (1m x 1m x 20cm)

- Pillars at Corner of Platforms (20cm x 20cm x 75cm)

- Red Cube (5cm x 5cm x 5cm) (target of pick-and-place operation)

refer to: worlds/world.world

Since planning is being managed by the MoveIt! Motion Planning Framework, we can rely on the Octomap plugin integrated into it. The benefit of this method is that it automatically removes the robot model from the octomap.

To facilitate the octomap generation, we need a pointcloud. On that note, due to the spread-out nature of the workspace, two perception sources are required - one facing each of the platforms.

This repository comes with both stereo cameras and infrared depth cameras. The left and right camera feeds from the stereo cameras are processed through the image_proc ROS package and outputs PointCloud2 depth data.

You can access the depth pointclouds calculated from stereo cameras by accessing:

/camera_1_stereo/points2- sensor_msgs/PointCloud2/camera_2_stereo/points2- sensor_msgs/PointCloud2when running the simulation.

However, it was found to be insufficiently accurate. An alternative is to use an infrared depth sensor that publishes a PointCloud2 directly.

You can access the depth pointclouds from infrared depth cameras by accessing:

/camera_1_depth/depth/color/points- sensor_msgs/PointCloud2/camera_1_depth/depth/color/points- sensor_msgs/PointCloud2when running the simulation.

For this simulation, we are relying on the infrared depth cameras for their pointclouds. MoveIt! offers pointcloud compatibility using the occupancy_map_monitor/PointCloudOctomapUpdater sensor plugin (refer to config/sensors_3d.yaml). This plugin takes in PointCloud2 and updates the octree.

Since the octomap covers everything in the environment, it also includes the block. We don't want this because then the anticipated collision will not allow for the motion plan to succeed. This can be addressed by filtering out the red coloured points from the PointCloud2. This is programmed as a rosservice that publishes the filtered PointCloud2 directly to the octomap.

refer to: scripts/publish_octomap_cloud.py

Motion planning is performed through MoveIt! as explained before. Refer to: scripts/pick_place.py.

The general methodology for planning and execution is:

- Clear octomap to empty previously recognised planning scene.

- Update octomap with filtered PointCloud2s from cameras.

- Plan using

MoveGroupCommander. - Execute plan (fallback to verify if plan was successful).

This repository depends on: